Structured Extraction from Patient Intake Form with LLM

In this post, we show how to use the OpenAI API to extract structured data from patient intake forms in different formats, such as PDF and DOCX.

You can find the full code here 🤗.

If this tutorial is helpful, please give CocoIndex on GitHub a star ⭐.

Prerequisites

Install Postgres

If you don't have Postgres installed, please refer to the installation guide.

Google Drive as alternative source (optional)

If you plan to load patient intake forms from Google Drive, you can refer to this example for more details.

Extract Structured Data from Google Drive

1. Define output schema

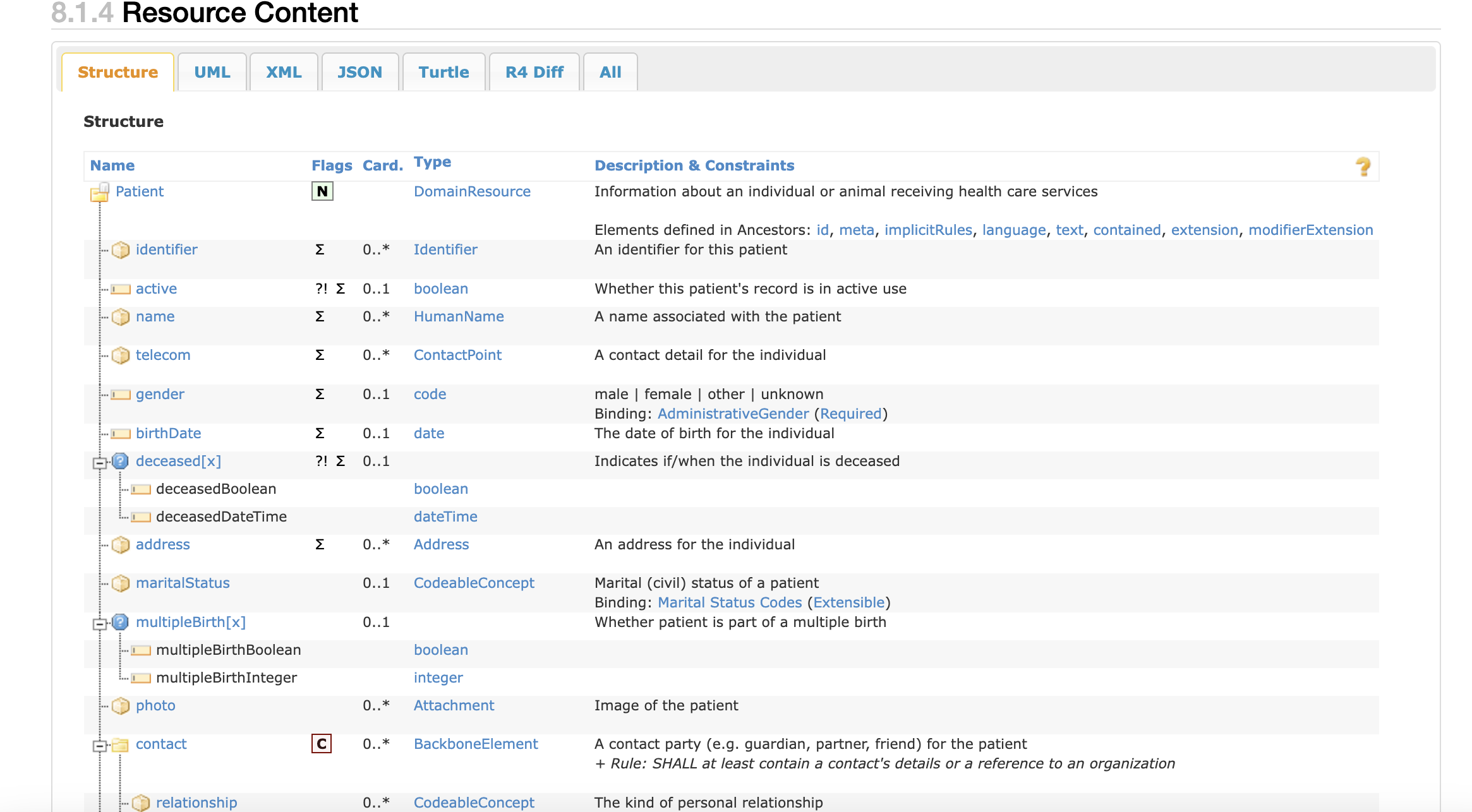

We are going to define the patient info schema for structured extraction. A good reference for defining such a schema is the Patient Resource in the FHIR standard.

In this tutorial, we'll define a simplified schema for patient information extraction:

    import dataclasses
    import datetime

    @dataclasses.dataclass
    class Contact:
        name: str
        phone: str
        relationship: str

    @dataclasses.dataclass
    class Address:
        street: str
        city: str
        state: str
        zip_code: str

    @dataclasses.dataclass
    class Pharmacy:
        name: str
        phone: str
        address: Address

    @dataclasses.dataclass
    class Insurance:
        provider: str
        policy_number: str
        group_number: str | None
        policyholder_name: str
        relationship_to_patient: str

    @dataclasses.dataclass
    class Condition:
        name: str
        diagnosed: bool

    @dataclasses.dataclass
    class Medication:
        name: str
        dosage: str

    @dataclasses.dataclass
    class Allergy:
        name: str

    @dataclasses.dataclass
    class Surgery:
        name: str
        date: str

    # Top-level record; fields typed "| None" may be absent from a form.
    @dataclasses.dataclass
    class Patient:
        name: str
        dob: datetime.date
        gender: str
        address: Address
        phone: str
        email: str
        preferred_contact_method: str
        emergency_contact: Contact
        insurance: Insurance | None
        reason_for_visit: str
        symptoms_duration: str
        past_conditions: list[Condition]
        current_medications: list[Medication]
        allergies: list[Allergy]
        surgeries: list[Surgery]
        occupation: str | None
        pharmacy: Pharmacy | None
        consent_given: bool
        consent_date: datetime.date | None

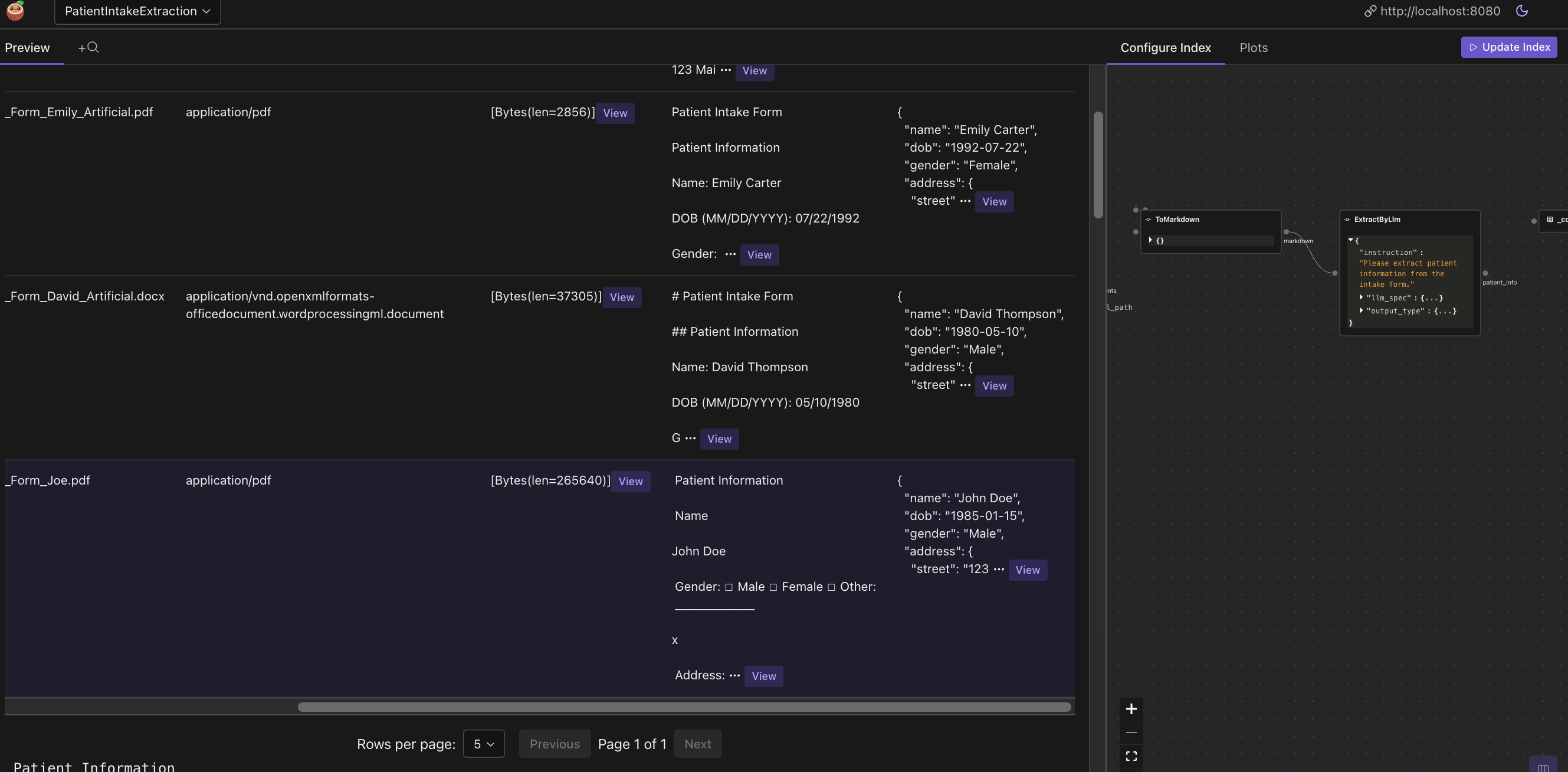

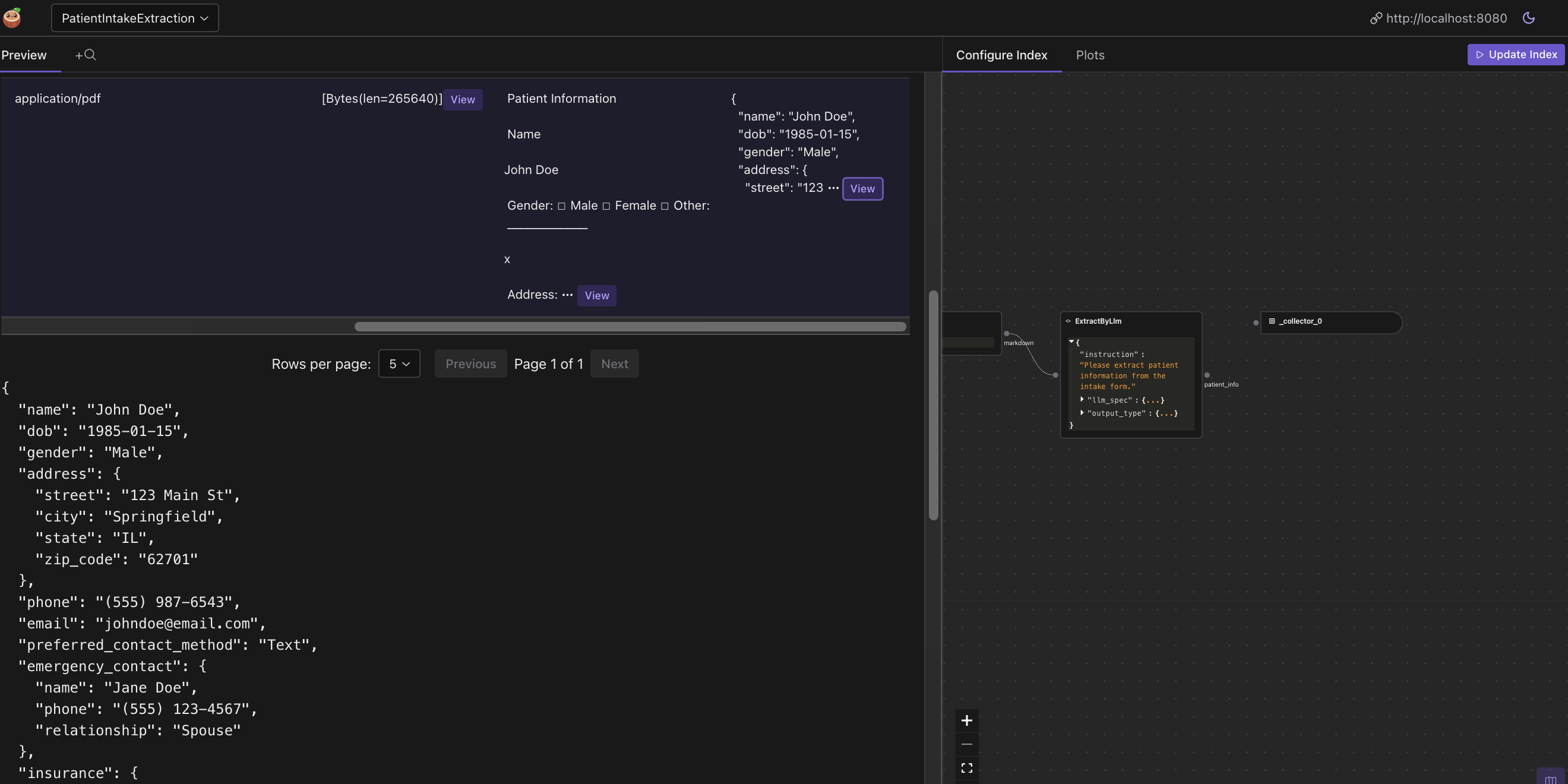

2. Define CocoIndex Flow

Let's define the CocoIndex flow to extract the structured data from patient intake forms.

- Add Google Drive as a source

    import os

    import cocoindex

    @cocoindex.flow_def(name="PatientIntakeExtraction")
    def patient_intake_extraction_flow(flow_builder: cocoindex.FlowBuilder, data_scope: cocoindex.DataScope):
        """
        Define a flow that extracts patient information from intake forms.
        """
        # Read the Google Drive credential path and folder IDs from the environment.
        credential_path = os.environ["GOOGLE_SERVICE_ACCOUNT_CREDENTIAL"]
        root_folder_ids = os.environ["GOOGLE_DRIVE_ROOT_FOLDER_IDS"].split(",")

        # binary=True: read file content as bytes rather than text.
        data_scope["documents"] = flow_builder.add_source(
            cocoindex.sources.GoogleDrive(
                service_account_credential_path=credential_path,
                root_folder_ids=root_folder_ids,
                binary=True))

        # Collector that gathers the rows to export later.
        patients_index = data_scope.add_collector()

flow_builder.add_source will create a table with a few sub fields. See documentation here.

- Parse documents with different formats to Markdown

Define a custom function to parse documents in any format to Markdown. Here we use MarkItDown to convert the file to Markdown. It also provides options to parse by LLM, like gpt-4o. At present, MarkItDown supports PDF, Word, Excel, images (EXIF metadata and OCR), and more. You can find its documentation here.

    import os
    import tempfile

    import cocoindex
    from markitdown import MarkItDown
    from openai import OpenAI

    class ToMarkdown(cocoindex.op.FunctionSpec):
        """Convert a document to markdown."""

    @cocoindex.op.executor_class(gpu=True, cache=True, behavior_version=1)
    class ToMarkdownExecutor:
        """Executor for ToMarkdown."""

        spec: ToMarkdown
        _converter: MarkItDown

        def prepare(self):
            # Create the converter once and reuse it across calls.
            client = OpenAI()
            self._converter = MarkItDown(llm_client=client, llm_model="gpt-4o")

        def __call__(self, content: bytes, filename: str) -> str:
            # The converter is called with a file path, so write the bytes to a
            # temp file that keeps the original extension.
            suffix = os.path.splitext(filename)[1]
            with tempfile.NamedTemporaryFile(delete=True, suffix=suffix) as temp_file:
                temp_file.write(content)
                temp_file.flush()
                text = self._converter.convert(temp_file.name).text_content
                return text

Next we plug it into the data flow.

        with data_scope["documents"].row() as doc:
            doc["markdown"] = doc["content"].transform(ToMarkdown(), filename=doc["filename"])

- Extract structured data from Markdown

CocoIndex provides built-in functions (e.g. ExtractByLlm) that process data using LLMs. In this example, we use gpt-4o from OpenAI to extract structured data from the Markdown. We also provide built-in support for Ollama, which allows you to run LLM models on your local machine easily.

        with data_scope["documents"].row() as doc:
            # Ask the LLM to fill in the Patient schema from the Markdown text.
            doc["patient_info"] = doc["markdown"].transform(
                cocoindex.functions.ExtractByLlm(
                    llm_spec=cocoindex.LlmSpec(
                        api_type=cocoindex.LlmApiType.OPENAI, model="gpt-4o"),
                    output_type=Patient,
                    instruction="Please extract patient information from the intake form."))
            patients_index.collect(
                filename=doc["filename"],
                patient_info=doc["patient_info"],
            )

After the extraction, we just need to cherry-pick anything we like from the output by calling the collect method on the collector defined above.

- Export the extracted data to a table.

        # Export the collected rows to Postgres, keyed by filename.
        patients_index.export(
            "patients",
            cocoindex.storages.Postgres(table_name="patients_info"),
            primary_key_fields=["filename"],
        )
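The ToMarkdown executor above writes the incoming bytes to a named temporary file because the converter is called with a file path. Here is a minimal sketch of that pattern in isolation. fake_convert and convert_bytes are hypothetical names: fake_convert stands in for the MarkItDown call so the snippet runs without any dependency, and it only reports the extension and size it was given.

```python
import os
import tempfile


def fake_convert(path: str) -> str:
    """Hypothetical stand-in for a path-based converter such as MarkItDown."""
    ext = os.path.splitext(path)[1]
    with open(path, "rb") as f:
        data = f.read()
    return f"{ext}:{len(data)} bytes"


def convert_bytes(content: bytes, filename: str) -> str:
    # Keep the original extension so a path-based converter can use it as a format hint.
    suffix = os.path.splitext(filename)[1]
    with tempfile.NamedTemporaryFile(delete=True, suffix=suffix) as temp_file:
        temp_file.write(content)
        # flush() pushes buffered bytes to disk before another reader opens the path.
        temp_file.flush()
        return fake_convert(temp_file.name)


result = convert_bytes(b"%PDF-1.7 example", "Patient_Intake_Form_Joe.pdf")
print(result)
```

Note that this sketch assumes a POSIX system: on Windows, a NamedTemporaryFile generally cannot be opened a second time by name while it is still open.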

Evaluate

🎉 Now you are all set with the extraction! For mission-critical use cases, it is important to evaluate the quality of the extraction. CocoIndex supports a simple way to do this: dump the extracted data to files and compare them with golden files. There may be fancier ways to evaluate the extraction, but for now we'll use this simple approach.

-

Dump the extracted data to YAML files.

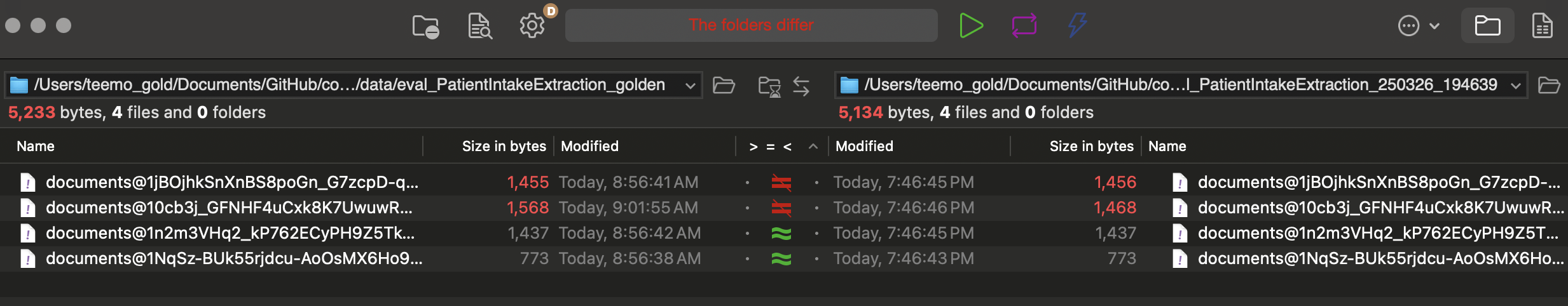

python3 main.py cocoindex evaluateIt dumps what should be indexed to files under a directory. Using my example data sources, it looks like the golden files with a timestamp on the directory name.

- Compare the extracted data with golden files.

We created a directory with golden files for each patient intake form. You can find them here.

You can run the following command to see the diff:

    diff -r data/eval_PatientIntakeExtraction_golden data/eval_PatientIntakeExtraction_output

I used a tool called DirEqual for Mac. We also recommend Meld for Linux and Windows.

A diff from DirEqual looks like this:

.

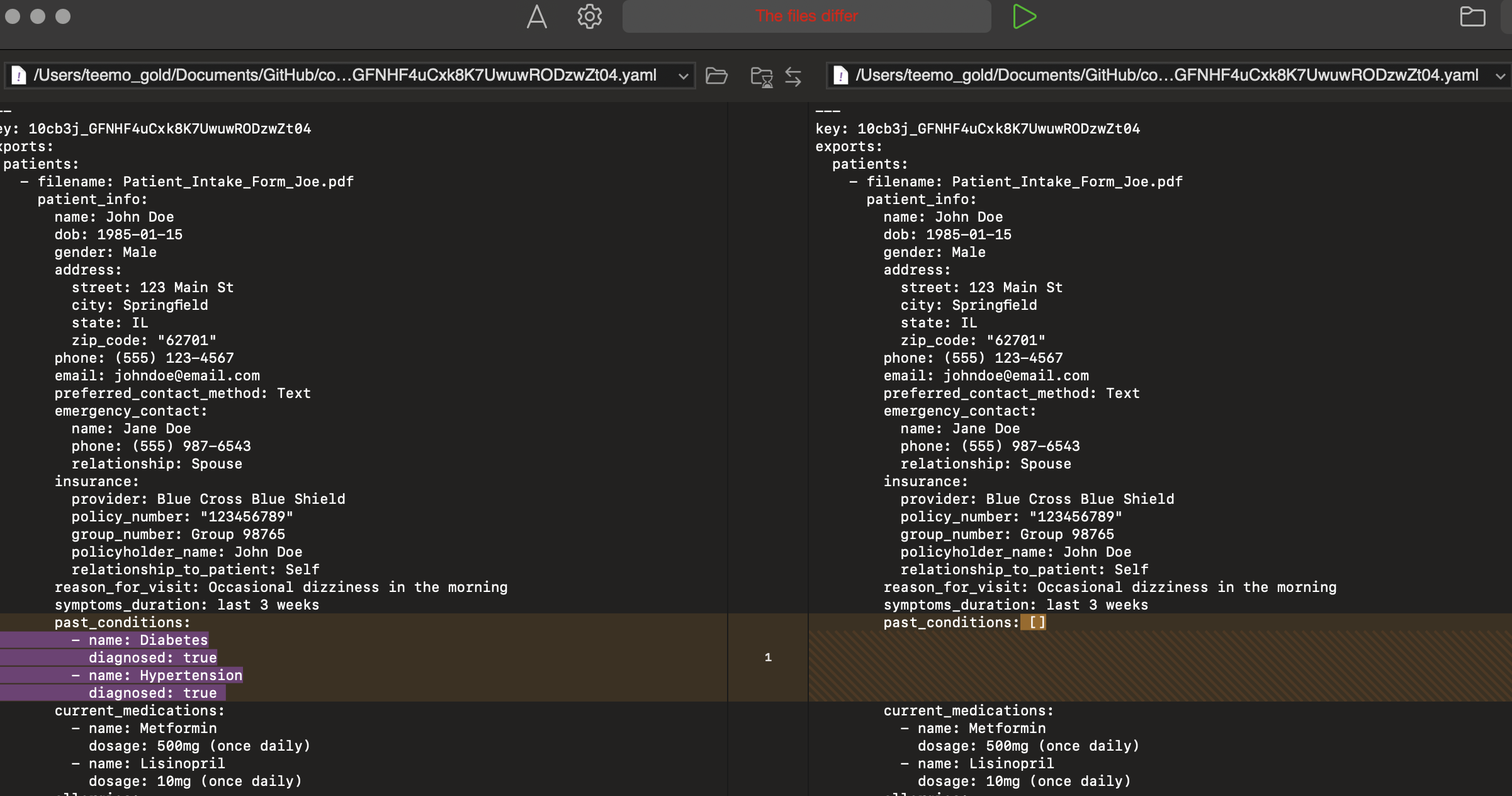

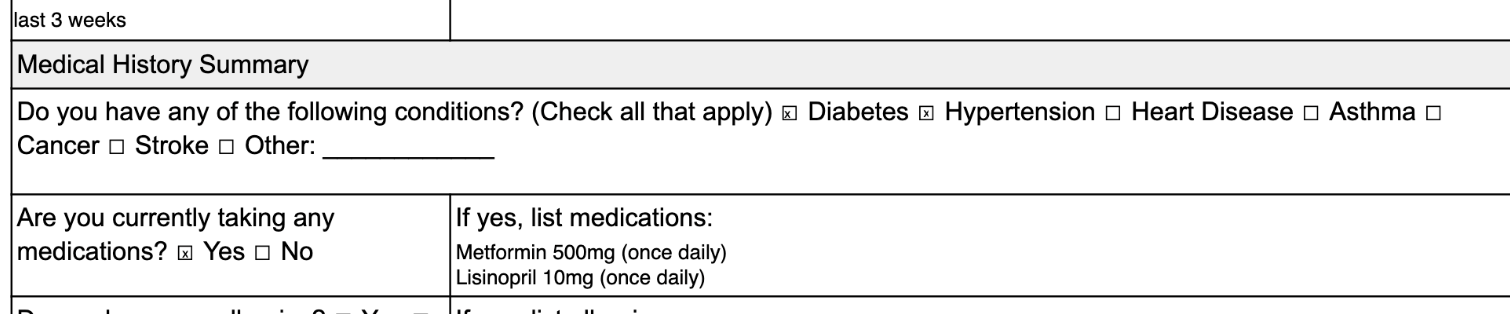

.And double click on any row to see file level diff. In my case, there's missing

conditionforPatient_Intake_Form_Joe.pdffile. .

.
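If you would rather stay in Python than use diff -r or a GUI tool, the standard library's filecmp module can do a similar recursive comparison. The following is only a sketch, not something CocoIndex provides: compare_dirs is a hypothetical helper, and the demo directories and YAML contents are made up so that the snippet runs on its own.

```python
import filecmp
import os
import tempfile


def compare_dirs(golden: str, output: str, prefix: str = "") -> dict[str, list[str]]:
    """Recursively compare two directories and return relative paths per category."""
    report = {"only_golden": [], "only_output": [], "different": []}
    comparison = filecmp.dircmp(golden, output)
    report["only_golden"] += [os.path.join(prefix, n) for n in comparison.left_only]
    report["only_output"] += [os.path.join(prefix, n) for n in comparison.right_only]
    # Compare file contents byte by byte instead of relying on os.stat() signatures.
    _, mismatch, errors = filecmp.cmpfiles(
        golden, output, comparison.common_files, shallow=False)
    report["different"] += [os.path.join(prefix, n) for n in mismatch + errors]
    for sub in comparison.common_dirs:
        child = compare_dirs(
            os.path.join(golden, sub), os.path.join(output, sub), os.path.join(prefix, sub))
        for key in report:
            report[key] += child[key]
    return report


# Demo with made-up files: one identical pair and one pair that differs.
with tempfile.TemporaryDirectory() as root:
    golden = os.path.join(root, "golden")
    output = os.path.join(root, "output")
    os.makedirs(golden)
    os.makedirs(output)
    files = {
        (golden, "joe.yaml"): "past_conditions:\n- name: Asthma\n",
        (output, "joe.yaml"): "past_conditions: []\n",
        (golden, "jane.yaml"): "name: Jane\n",
        (output, "jane.yaml"): "name: Jane\n",
    }
    for (folder, name), text in files.items():
        with open(os.path.join(folder, name), "w") as f:
            f.write(text)
    report = compare_dirs(golden, output)
    print(report)
```

To use it on real data, call compare_dirs with the golden and output directories from the diff command above; an empty report means the extraction matches the golden files.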

Troubleshooting

My original golden file for this record is this one.

We will troubleshoot in two steps:

- Convert to Markdown

- Extract structured data from Markdown

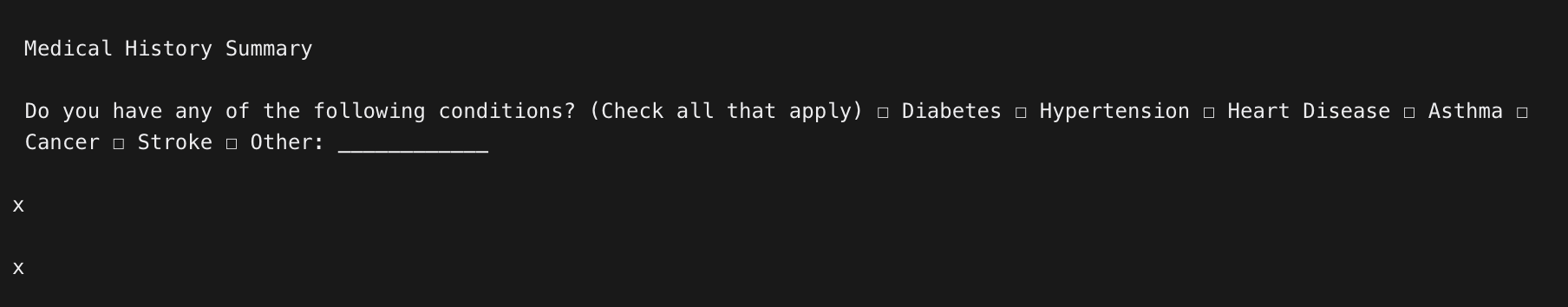

In this tutorial, we'll show how to use CocoInsight to troubleshoot this issue.

    cocoindex server -ci main

Go to https://cocoindex.io/cocoinsight. You will see an interactive UI to explore the data.

Click on the markdown column for Patient_Intake_Form_Joe.pdf to see the Markdown content.

The LLM extraction does not interpret this Markdown well. From here, we can try a few different models for the Markdown converter or the extraction LLM and iterate to see whether the results improve, or whether the record needs manual correction.
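While iterating on models, it can help to compare an extracted record with its golden counterpart field by field rather than as text. The following is a minimal sketch, assuming both records have already been loaded into plain Python dicts and lists (for example from the YAML files). diff_fields is a hypothetical helper and the sample records are made up for illustration.

```python
def diff_fields(golden, extracted, path=""):
    """Return (path, golden_value, extracted_value) for every mismatching field.

    A missing key or list item is reported as None, so it cannot be told
    apart from an explicit None value.
    """
    if isinstance(golden, dict) and isinstance(extracted, dict):
        diffs = []
        for key in sorted(set(golden) | set(extracted)):
            child_path = f"{path}.{key}" if path else key
            diffs += diff_fields(golden.get(key), extracted.get(key), child_path)
        return diffs
    if isinstance(golden, list) and isinstance(extracted, list):
        diffs = []
        for i in range(max(len(golden), len(extracted))):
            g = golden[i] if i < len(golden) else None
            e = extracted[i] if i < len(extracted) else None
            diffs += diff_fields(g, e, f"{path}[{i}]")
        return diffs
    # Leaf values, or values of different types: compare directly.
    return [] if golden == extracted else [(path, golden, extracted)]


# Made-up records: the extracted one is missing a condition.
golden = {"name": "Joe", "past_conditions": [{"name": "Asthma", "diagnosed": True}]}
extracted = {"name": "Joe", "past_conditions": []}
mismatches = diff_fields(golden, extracted)
for field_path, expected, actual in mismatches:
    print(f"{field_path}: expected {expected!r}, got {actual!r}")
```

Counting the mismatches per run gives a rough number to track as you swap models, though list items are compared by position, so a reordered list will show up as several differences.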

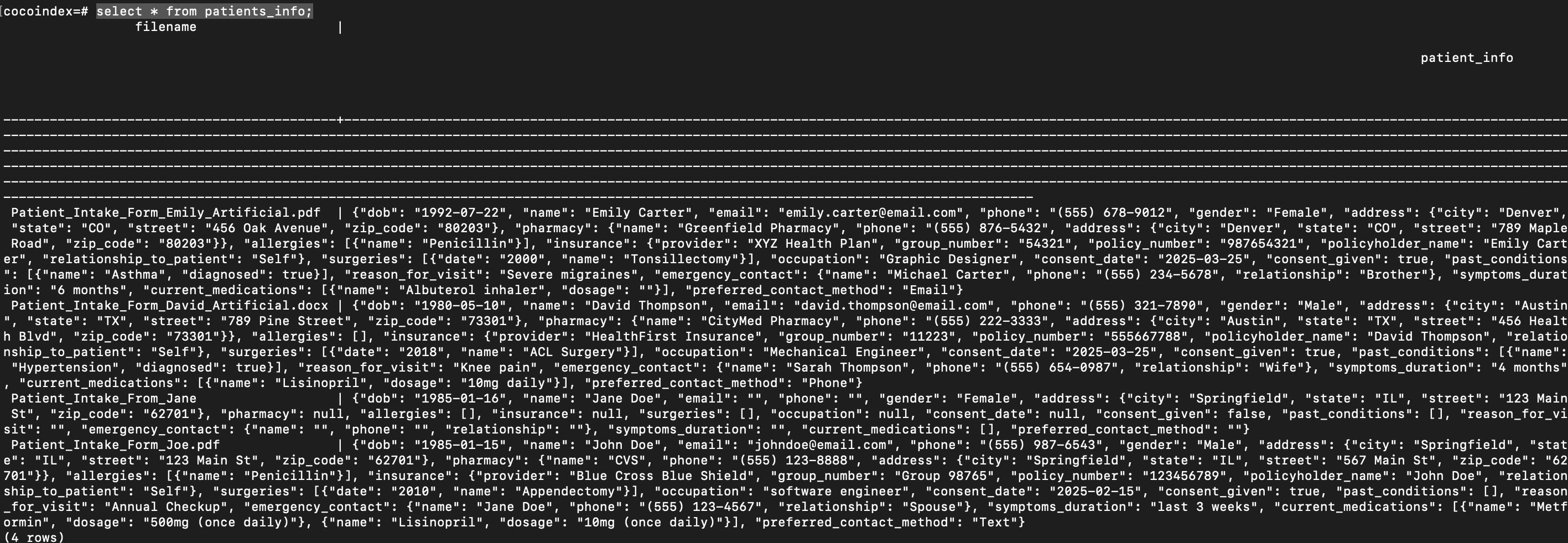

Query the extracted data

Run the following commands to set up and update the index.

    cocoindex setup main
    cocoindex update main

You'll see the index update status in the terminal.

After the index is built, you have a table named patients_info. You can query it at any time, e.g., by starting a Postgres shell:

    psql postgres://cocoindex:cocoindex@localhost/cocoindex

Then run:

    select * from patients_info;

You will see the contents of the patients_info table.

You can also use CocoInsight, mentioned above, as a debugging tool to explore the data.

Support us

We are constantly improving, and more features and examples are coming soon. If this tutorial is helpful, please give us a star ⭐ on GitHub to help us grow.