CocoIndex Changelog 2025-03-20

We're excited to share our progress with you! We'll be publishing these updates weekly, but since this is our first one, we're covering highlights from the last two weeks.

We had 9 releases and merged 100+ PRs in the last 2 weeks (yes, we shipped a lot!). Here are the highlights.

Thank you for your support and warm coconut hugs to all our contributors! 🤗 Let's dive into what we've been building...

📖 Documentation

We've added a lot of new material to back you up; see our shiny documentation site for CocoIndex.

We've received a lot of feedback from our community, and we're excited to see you using CocoIndex in your projects! We've also added onboarding videos to help you get started.

✨ LLM Support

We've added support for using LLMs to extract structured information from text; see the LLM Support documentation.

CocoIndex provides powerful built-in functions like ExtractByLlm that leverage Large Language Models to process your data. To use these functions, you'll need to configure an LLM Spec that defines which LLM integration you want to use (such as OpenAI or Ollama), specifies the model, and sets any additional parameters needed for your specific use case.

For Ollama, you can pull models from the Ollama Model Library, which offers a variety of open-source models, including recently published ones like Gemma 3. Simply run the ollama pull command followed by the model name (e.g., ollama pull llama3.2).

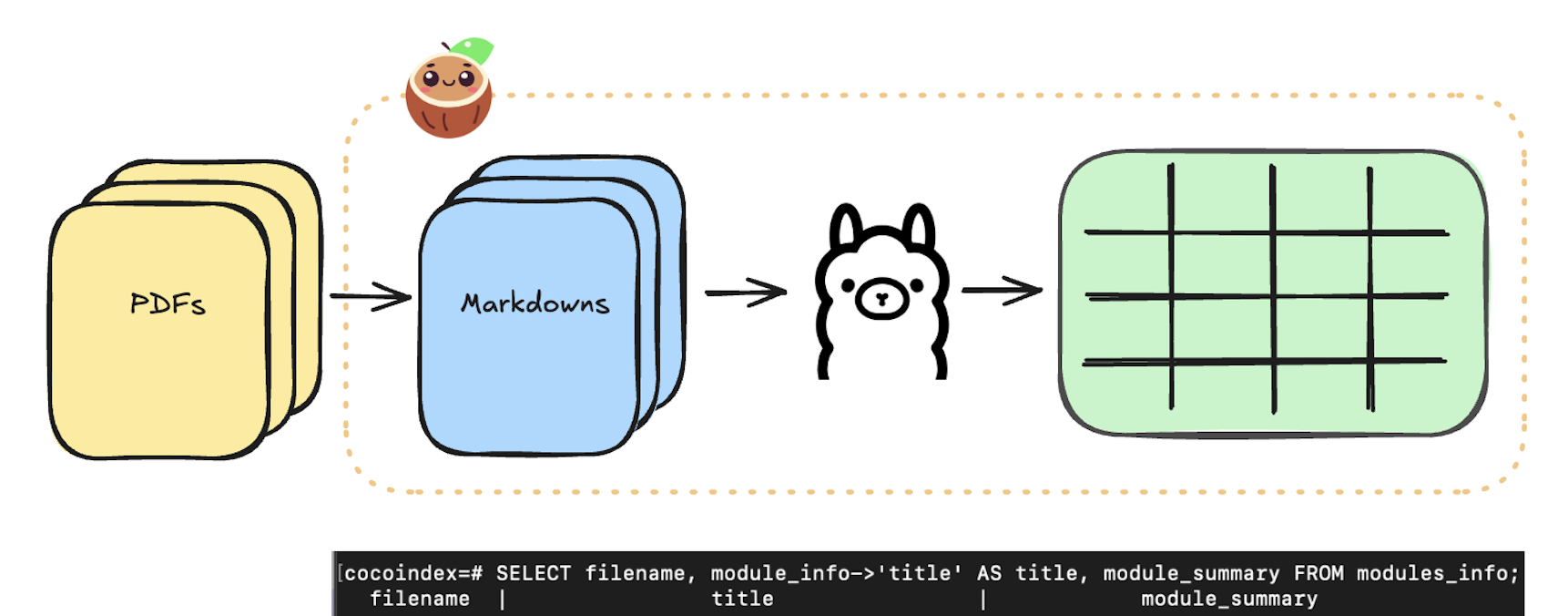

We published a blog post with a step-by-step guide to using Ollama for structured extraction from a PDF. See the full code example here.

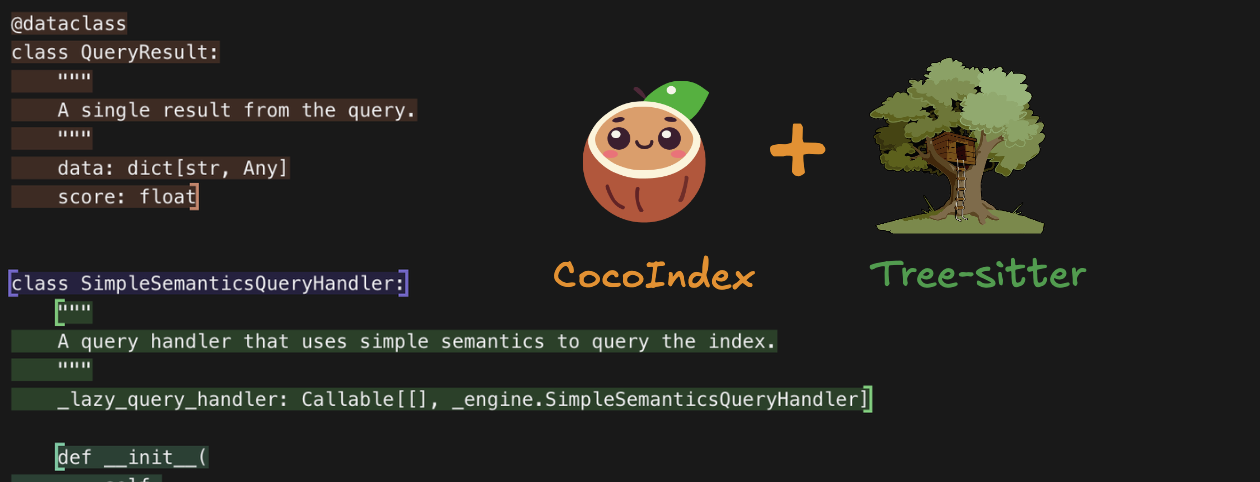

📦 Native support for chunking codebases

We've added native support for chunking codebases with Tree-sitter integration. This allows for more intelligent code splitting based on syntax structure rather than arbitrary line breaks.

CocoIndex leverages Tree-sitter to parse code and extract syntax trees for various programming languages. This enables syntactically coherent chunking that preserves the context and structure of your code, making your RAG system more effective for code retrieval.
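As a simplified, single-language illustration of syntax-aware chunking, the sketch below swaps Tree-sitter for Python's built-in ast module and splits Python source at top-level statements, so a function or class is never cut in half. chunk_python_source is a hypothetical helper, not CocoIndex's implementation; a real splitter such as SplitRecursively also handles many languages and enforces chunk sizes.

```python
import ast


def chunk_python_source(source: str) -> list[str]:
    """Hypothetical helper: split Python source along top-level syntax nodes.

    Each top-level statement (import, function, class, assignment, ...)
    becomes one chunk, so no definition is split across chunks.
    No size limit is enforced in this sketch.
    """
    tree = ast.parse(source)
    lines = source.splitlines(keepends=True)
    chunks = []
    for node in tree.body:
        # Decorators sit above the `def`/`class` line, so start at the earliest one.
        decorators = getattr(node, "decorator_list", [])
        start = min([node.lineno] + [d.lineno for d in decorators])
        end = node.end_lineno  # end positions are available since Python 3.8
        chunks.append("".join(lines[start - 1 : end]))
    return chunks


sample = '''import os


def add(a, b):
    return a + b


class Greeter:
    def hello(self):
        return "hi"
'''

for chunk in chunk_python_source(sample):
    print("---")
    print(chunk, end="")
```

Blank lines between definitions are dropped, because each chunk spans exactly the lines of one syntax node.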

We've added support for indexing codebases, along with a step-by-step guide on how to index one. Read the full blog post here and see the full code example here.

🛠️ Support composite types in Python custom functions

In CocoIndex, all data processed by a flow has a type that is determined when the flow is defined, before any actual data is processed at runtime. Read the full description and documentation for data types here. This makes the schema of the data processed by CocoIndex explicit, so you can easily determine the schema of your index.

These types were already supported in the core, and data sources and functions like SplitRecursively already produced them.
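To make the idea of a definition-time schema concrete, here is a plain-Python sketch, independent of CocoIndex, that derives a nested schema from dataclass annotations alone without looking at any data. The fields of ModuleInfo, ClassInfo, and MethodInfo and the schema_of helper are hypothetical; CocoIndex's type system has its own representation.

```python
import dataclasses
import typing


@dataclasses.dataclass
class ClassInfo:  # hypothetical struct type
    name: str


@dataclasses.dataclass
class MethodInfo:  # hypothetical struct type
    name: str
    args: list[str]  # a collection of basic values


@dataclasses.dataclass
class ModuleInfo:  # hypothetical struct with nested collections
    title: str
    classes: list[ClassInfo]
    methods: list[MethodInfo]


def schema_of(tp) -> object:
    """Derive a schema from type annotations alone; no data is needed."""
    if dataclasses.is_dataclass(tp):
        hints = typing.get_type_hints(tp)
        # Struct: map each field name to the schema of its type.
        return {f.name: schema_of(hints[f.name]) for f in dataclasses.fields(tp)}
    if typing.get_origin(tp) is list:
        # Collection: a one-element list holding the item schema.
        (item_type,) = typing.get_args(tp)
        return [schema_of(item_type)]
    # Basic type: just its name.
    return tp.__name__


print(schema_of(ModuleInfo))
# {'title': 'str', 'classes': [{'name': 'str'}], 'methods': [{'name': 'str', 'args': ['str']}]}
```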

Now we support representing struct and collection types in Python, so that they can be used in the following ways:

1. As input/output types for your custom functions

For example, we can define a custom function:

```python
import cocoindex


@cocoindex.op.function()
def summarize_module(module_info: ModuleInfo) -> ModuleSummary:
    """Summarize a Python module."""
    return ModuleSummary(
        num_classes=len(module_info.classes),
        num_methods=len(module_info.methods),
    )
```

Here ModuleInfo and ModuleSummary are custom dataclass types.

For a full example, see here.

2. In built-in functions that take custom types (e.g. as the output_type of ExtractByLlm)

For an extraction example, see here.

```python
# Inside a flow definition: `doc` is a row being processed,
# and ModuleInfo is a custom dataclass type.
doc["module_info"] = doc["markdown"].transform(
    cocoindex.functions.ExtractByLlm(
        llm_spec=cocoindex.LlmSpec(
            api_type=cocoindex.LlmApiType.OLLAMA,
            # See the full list of models: https://ollama.com/library
            model="llama3.2",
        ),
        # To use an OpenAI API model instead of Ollama, replace the spec above with:
        # llm_spec=cocoindex.LlmSpec(
        #     api_type=cocoindex.LlmApiType.OPENAI, model="gpt-4o"),
        output_type=ModuleInfo,
        instruction="Please extract Python module information from the manual.",
    )
)
```
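Conceptually, output_type=ModuleInfo tells the extraction step what structure to produce. The sketch below stands in for that step under clear assumptions: the LLM has already returned a JSON object (llm_json is made-up sample data, not real model output), and a hypothetical from_dict helper converts it into nested dataclasses. It then applies the summarize_module logic from item 1 as a plain function. The field layout of ModuleInfo, ClassInfo, and MethodInfo is also hypothetical, and CocoIndex performs this conversion internally in its own way.

```python
import dataclasses
import json
import typing


@dataclasses.dataclass
class ClassInfo:  # hypothetical field layout
    name: str


@dataclasses.dataclass
class MethodInfo:  # hypothetical field layout
    name: str
    args: list[str]


@dataclasses.dataclass
class ModuleInfo:  # hypothetical field layout
    title: str
    classes: list[ClassInfo]
    methods: list[MethodInfo]


@dataclasses.dataclass
class ModuleSummary:
    num_classes: int
    num_methods: int


def from_dict(tp, value):
    """Hypothetical helper: build `tp` (dataclass, list[...], or basic type) from JSON data."""
    if dataclasses.is_dataclass(tp):
        hints = typing.get_type_hints(tp)
        return tp(**{f.name: from_dict(hints[f.name], value[f.name])
                     for f in dataclasses.fields(tp)})
    if typing.get_origin(tp) is list:
        (item_type,) = typing.get_args(tp)
        return [from_dict(item_type, item) for item in value]
    return value  # basic types (str, int, ...) pass through unchanged


def summarize_module(module_info: ModuleInfo) -> ModuleSummary:
    """Same logic as the decorated example, without the CocoIndex decorator."""
    return ModuleSummary(
        num_classes=len(module_info.classes),
        num_methods=len(module_info.methods),
    )


# Made-up sample of what an LLM might return for output_type=ModuleInfo.
llm_json = """{"title": "csv", "classes": [{"name": "DictReader"}],
  "methods": [{"name": "reader", "args": ["csvfile"]},
              {"name": "writer", "args": ["csvfile"]}]}"""

info = from_dict(ModuleInfo, json.loads(llm_json))
summary = summarize_module(info)
print(summary)  # ModuleSummary(num_classes=1, num_methods=2)
```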

🧩 Make custom functions easier to define

You can directly define your custom function with a function decorator. This fits simple cases where the function doesn't need additional configuration or extra setup logic.

See documentation here.

Example usage is below (see the full code example here):

```python
import cocoindex


@cocoindex.op.function()
def summarize_module(module_info: ModuleInfo) -> ModuleSummary:
    """Summarize a Python module."""
    return ModuleSummary(
        num_classes=len(module_info.classes),
        num_methods=len(module_info.methods),
    )
```
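One way to see why a decorator can be enough for simple cases: the function's type annotations already state its input and output types. The sketch below shows that idea in plain Python, with a hypothetical function_op decorator that reads the annotations and records the function in a hypothetical REGISTRY dict. It is an illustration only and does not reflect how cocoindex.op.function() is actually implemented.

```python
import inspect
import typing
from typing import Callable

# Hypothetical registry: op name -> (function, argument types, return type).
REGISTRY: dict[str, tuple[Callable, dict[str, type], type]] = {}


def function_op():
    """Hypothetical decorator factory, mirroring the `@...function()` call style."""
    def decorate(fn: Callable) -> Callable:
        hints = typing.get_type_hints(fn)
        # This sketch requires a return annotation (KeyError otherwise).
        return_type = hints.pop("return")
        # Keep argument types in declaration order.
        params = inspect.signature(fn).parameters
        arg_types = {name: hints[name] for name in params}
        REGISTRY[fn.__name__] = (fn, arg_types, return_type)
        return fn  # the function stays directly callable
    return decorate


@function_op()
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())
```

No spec class or executor setup is needed here; a function that needs configuration or one-time setup is better served by a spec plus an executor class.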

⚡ Support optimizations by caching

Custom functions can take a cache parameter. When it is True, the executor caches the function's result for reuse during reprocessing. We recommend setting it to True for any computationally intensive function.

The output is reused only if all of the following are unchanged: the spec (if there is one), the input data, and the behavior of the function. To track behavior changes, you need to provide a behavior_version and increase it whenever the function's behavior changes.

For example, the following enables caching for a function implemented with an executor class (see the full code example here):

```python
import cocoindex


@cocoindex.op.executor_class(gpu=True, cache=True, behavior_version=1)
class PdfToMarkdownExecutor:
    """Executor for PdfToMarkdown."""

    ...  # remaining members elided; see the full code example
```

See the documentation here.
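The reuse rule described above can be pictured as a cache keyed on everything that may change a function's output. Below is a minimal sketch under stated assumptions: the spec and input are JSON-serializable, the cache is an in-memory dict, and cache_key and cached_call are hypothetical helpers. CocoIndex computes its own fingerprints and stores its cache in the database, so treat this only as an illustration of the idea.

```python
import hashlib
import json
from typing import Any, Callable

_cache: dict[str, Any] = {}  # in-memory stand-in for a persistent cache


def cache_key(fn_name: str, behavior_version: int, spec: Any, input_data: Any) -> str:
    """Fingerprint everything that may change the output (assumes JSON-serializable values)."""
    payload = json.dumps([fn_name, behavior_version, spec, input_data], sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def cached_call(fn: Callable, behavior_version: int, spec: Any, input_data: Any) -> Any:
    """Return a cached result if the key matches; otherwise compute and store it."""
    key = cache_key(fn.__name__, behavior_version, spec, input_data)
    if key not in _cache:
        _cache[key] = fn(spec, input_data)  # computed only on a cache miss
    return _cache[key]


calls = []  # records real invocations, to show when the cache is hit


def expensive_upper(spec, text):
    calls.append(text)
    return text.upper() if spec["upper"] else text


cached_call(expensive_upper, 1, {"upper": True}, "abc")  # computed
cached_call(expensive_upper, 1, {"upper": True}, "abc")  # reused from the cache
cached_call(expensive_upper, 2, {"upper": True}, "abc")  # new behavior_version: recomputed
```

Because behavior_version is part of the key, bumping it from 1 to 2 forces a recomputation instead of reusing results produced by the old logic.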

Thanks to the community 🤗🎉!

- @eltociear made their first contribution in #68

- @wykrrr made their first contribution in #112

More upgrades

v0.1.12 Basic Google Drive support, chunking improvement

- Support for more languages in SplitRecursively.

- Skip chunks without alphanumeric characters.

- Add GoogleDrive source.

v0.1.11 Native support on chunking for codebase, @function decorator

- Add support for more languages in SplitRecursively.

- Add @function decorator for simple functions.

v0.1.9 Improve native support for chunking, Core robustness

- Use OpArgsResolver to make resolving multiple input args easier.

- Extract Rust-Python binding logic into a module for reuse.

- Robust support for passing constants to functions.

- Update SplitRecursively to take language and chunk sizes dynamically.

- Update the documents about SplitRecursively and transform arguments.

v0.1.8 LLM Integration

- Added support for extracting structured information from text by LLM

- ExtractByLlm function

- LLM integration

- Manual Extraction example.

v0.1.7 Support more data types, Core robustness

- Support Python->Rust Struct/Table type bindings.

- Skip update table if type changed without storage type change.

- Use List instead of Table for the Python list type.

- Serializing/deserializing struct to JSON object for Postgres.

- Support type annotations for both List and Table in Python SDK.

- Support any data type in Rust->Python.

- Refactor logic for parsing Python types to support dataclass type binding.

- Support ... | None (or Optional[...]) when analyzing type.

- Support creating dataclasses in Rust->Python value bindings.

- Use custom code to convert Rust values to Python so bytes are bytes

v0.1.6 Serialization fix

- A bunch of serialization fixes in the Python SDK.

v0.1.5 Core, Performance, Python SDK, Query Metrics

- Correct similarity score for cosine and inner product.

- Leverage pythonize to bypass JSON serialization in Python to Rust.

- Support struct and table types in Python to Rust type encoding.

v0.1.4 Core, Performance, Custom Function, Improve SentenceTransformerEmbed

- Avoid clone when reading data from cache.

- Custom function documentation: enable cache, links to examples.

- SentenceTransformerEmbed supports additional args passed to the library.

- Polish document for quickstart.

- Bug fix: for evaluate API, use position in source instead of schema.

- Enable evaluation cache in the readonly evaluation API.

v0.1.3 Core & performance

- Add cache utilities and plumbing through the evaluator.

- Tolerate JSON null in memoization_info field in DB.

- Implement a generic fingerprinter for computing cache key.

- Evaluator starts to read/write cache if enabled

- A bunch of bug fixes / cleanups for cache feature.

- Output detailed error when indexing failed for a row.

- Backward compatible with old-format fingerprints already in the database.

- Enable cache in SentenceTransformerEmbed and PdfToMarkdown.