Customizable Data Indexing Pipelines

CocoIndex is the world's first open-source engine that supports both custom transformation logic and incremental processing specialized for data indexing. So, what is custom transformation logic?

Index-as-a-service (or RAG-as-a-service) offerings tend to package a predesigned pipeline and expose two endpoints to users: one to configure the source, and one to read from the index. Many predefined pipelines for unstructured documents work this way. The pipeline itself is fairly simple: parse PDFs, perform some chunking and embedding, and write the results to a vector store. This works well if your requirements are simple and primarily focused on document parsing.

We've talked to many developers across various verticals who need data indexing, and for them the ability to customize the logic is essential for high-quality data retrieval. For example:

- Basic choices for pipeline components

  - Which parser for different files?

  - How to chunk files? (Documents with structure normally have different optimal chunking strategies.)

  - Which embedding model? Which vector database?

- What should the pipeline do?

  - Is it a simple text embedding?

  - Is it building a knowledge graph?

  - Should it perform simple summarization for each source for retrieval without chunking?

- What additional work is needed to improve pipeline quality?

  - Do we need deduplication?

  - Do we need to look up different sources to enrich our data?

  - Do we need to reconcile and align multiple documents?

Here we'll walk through some examples of the topology of index pipelines, and we can explore more in the future!

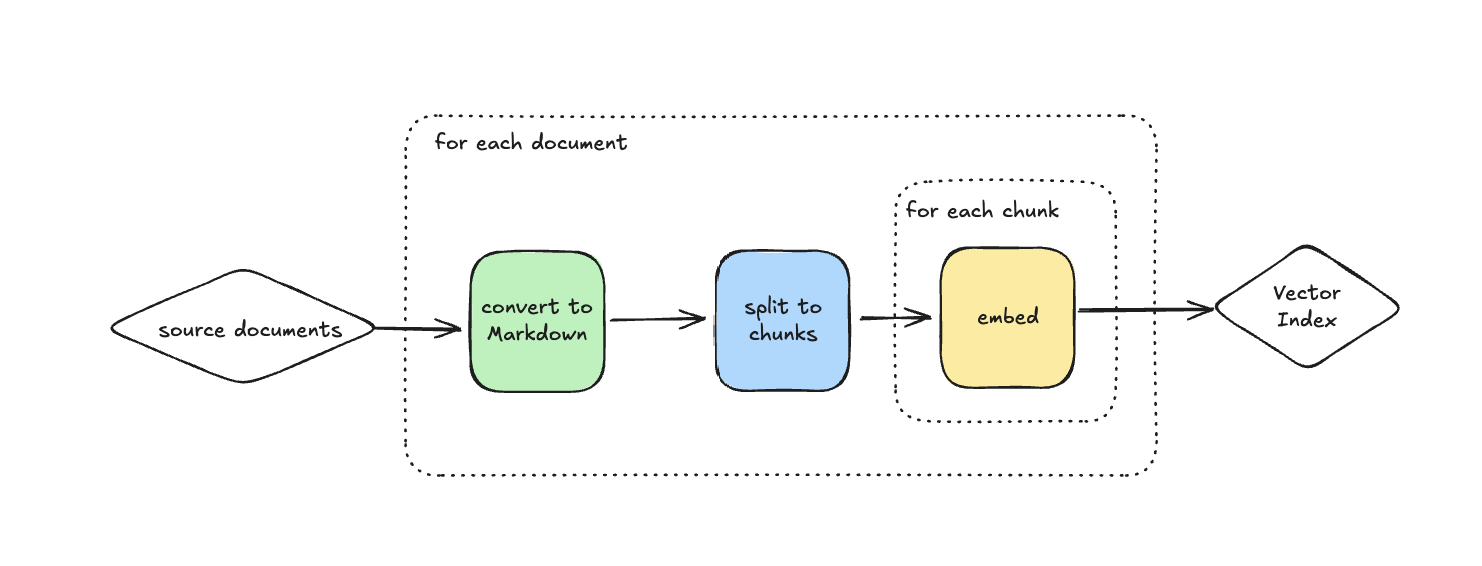

Basic embedding

In this example, we do the following:

- Read from sources, for example, a list of PDFs

- For each source file, parse it to markdown with a PDF parser. There are lots of choices out there: LlamaParse, Unstructured, Gemini, DeepSeek, etc.

- Chunk all the markdown files. Chunking breaks text into smaller units that are easier to organize and process. There are many options here, such as flat chunks, hierarchical chunks, and secondary chunks, and there is a lot of published work in this area. There are also specialized chunking strategies for different verticals: for code, tools like Tree-sitter can help parse and chunk based on syntax. The best choice normally depends on your document structure and requirements.

- Perform embedding for each chunk. There are lots of great choices, for example models from Voyage AI and OpenAI.

- Collect the embeddings in a vector store. Each embedding is normally stored with metadata, for example, which file it belongs to. There are many great choices for vector stores, such as Chroma, Milvus, and Pinecone, and many databases now support vector indexing too, for example PostgreSQL (with pgvector) and MongoDB.
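The steps above can be sketched in plain Python. This is only a minimal sketch under loud assumptions: `parse_to_markdown`, `chunk_text`, `toy_embed`, and the in-memory `vector_store` list are hypothetical stand-ins for a real PDF parser, chunking strategy, embedding model, and vector store, and none of it is CocoIndex's API.

```python
import hashlib
import math

def parse_to_markdown(raw: str) -> str:
    """Hypothetical stand-in for a PDF parser: here the 'file' is already text."""
    return raw.strip()

def chunk_text(markdown: str, max_words: int = 8) -> list[str]:
    """Flat chunking: consecutive windows of at most max_words words."""
    words = markdown.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def toy_embed(text: str, dim: int = 16) -> list[float]:
    """Toy embedding (assumption): hash each word into a bucket, then L2-normalize."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def build_index(sources: dict[str, str]) -> list[dict]:
    """Run parse -> chunk -> embed for each source and collect rows with metadata."""
    vector_store = []
    for filename, raw in sources.items():
        markdown = parse_to_markdown(raw)
        for position, chunk in enumerate(chunk_text(markdown)):
            vector_store.append({
                "filename": filename,   # metadata: which file the chunk came from
                "position": position,   # metadata: chunk order within the file
                "text": chunk,
                "embedding": toy_embed(chunk),
            })
    return vector_store

# Hypothetical sources: file names mapped to already-extracted text.
sources = {
    "a.pdf": "Incremental processing only recomputes what changed in the source data.",
    "b.pdf": "Vector stores keep embeddings together with metadata about each chunk.",
}
index = build_index(sources)
```

Each stage is an ordinary function, so swapping the parser, the chunking strategy, or the embedding model means replacing one function while the rest of the flow stays the same.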

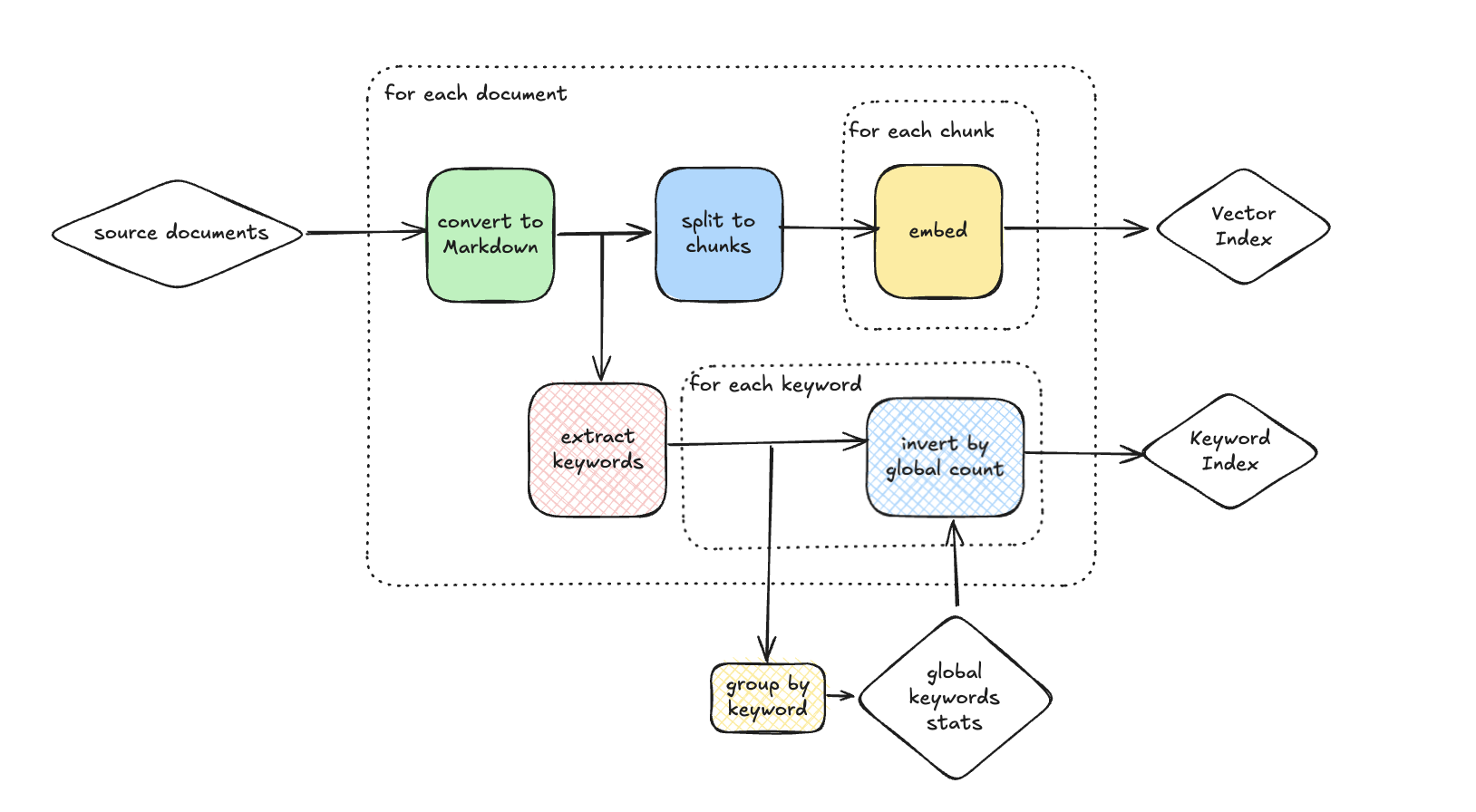

A combination of TF-IDF and vector search

Anthropic has published a great article about Contextual Retrieval that suggests combining vector-based search with TF-IDF-based keyword search (the article uses BM25, a ranking function that builds on TF-IDF).

One way to think about the data flow for this pipeline: in addition to preparing the vector embeddings as in the basic embedding example above, after parsing the source data we can do the following:

- For each document, extract its keywords along with their frequencies.

- Across all documents, group by keyword and sum up the frequencies, storing the result in internal storage.

- For each keyword in each document, calculate the TF-IDF score using two inputs: the keyword's frequency in the current document, and the sum of its frequencies across all documents. Store the keyword along with its TF-IDF score (if above a certain threshold) in a keyword index.
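The keyword path can be sketched roughly as follows. One caveat: the steps above aggregate summed frequencies, whereas textbook TF-IDF uses the number of documents that contain each keyword (document frequency). This sketch therefore tracks both in the group-by step and uses document frequency for the score. The function names, the whitespace-style tokenizer, and the threshold value are all assumptions made for illustration.

```python
import math
import re
from collections import Counter

def extract_keywords(text: str) -> Counter:
    """Per-document step: count how often each lowercase word occurs."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def aggregate(doc_counts: dict[str, Counter]) -> tuple[Counter, Counter]:
    """Cross-document step: group by keyword, summing frequencies and counting documents."""
    total_freq, doc_freq = Counter(), Counter()
    for counts in doc_counts.values():
        total_freq.update(counts)         # summed frequency across all documents
        doc_freq.update(counts.keys())    # number of documents containing the keyword
    return total_freq, doc_freq

def build_keyword_index(docs: dict[str, str], threshold: float = 0.05) -> dict[str, dict[str, float]]:
    """Return keyword -> {doc_id: score}, keeping only scores above the threshold."""
    doc_counts = {doc_id: extract_keywords(text) for doc_id, text in docs.items()}
    _, doc_freq = aggregate(doc_counts)
    keyword_index: dict[str, dict[str, float]] = {}
    for doc_id, counts in doc_counts.items():
        doc_len = sum(counts.values())
        for keyword, count in counts.items():
            tf = count / doc_len                            # term frequency in this document
            idf = math.log(len(docs) / doc_freq[keyword])   # rarer keywords score higher
            score = tf * idf
            if score > threshold:
                keyword_index.setdefault(keyword, {})[doc_id] = score
    return keyword_index

# Toy corpus, purely illustrative.
docs = {
    "d1": "the cat sat on the mat",
    "d2": "the dog sat on the log",
    "d3": "the cat chased the dog",
}
keyword_index = build_keyword_index(docs)
```

In this toy corpus, "the" appears in every document, so its IDF is zero and it never reaches the keyword index, while a word that occurs in only one document, such as "mat", scores highest.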

At query time, we can query both the vector index and the keyword index, and combine the results from both.
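The text does not say how the two result lists should be combined. One common option, swapped in here as an assumption, is reciprocal rank fusion (RRF), which needs only the rank of each document in each list. The document ids and hit lists below are hypothetical.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: each document scores sum(1 / (k + rank)) over the lists it appears in."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by fused score (highest first); ties are broken by doc id for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

vector_hits = ["d3", "d1", "d2"]   # hypothetical results from the vector index
keyword_hits = ["d1", "d4"]        # hypothetical results from the keyword index
fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
```

A document that appears in both lists, such as `d1` here, accumulates score from each and tends to rise to the top, which is the behavior a hybrid search usually wants.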

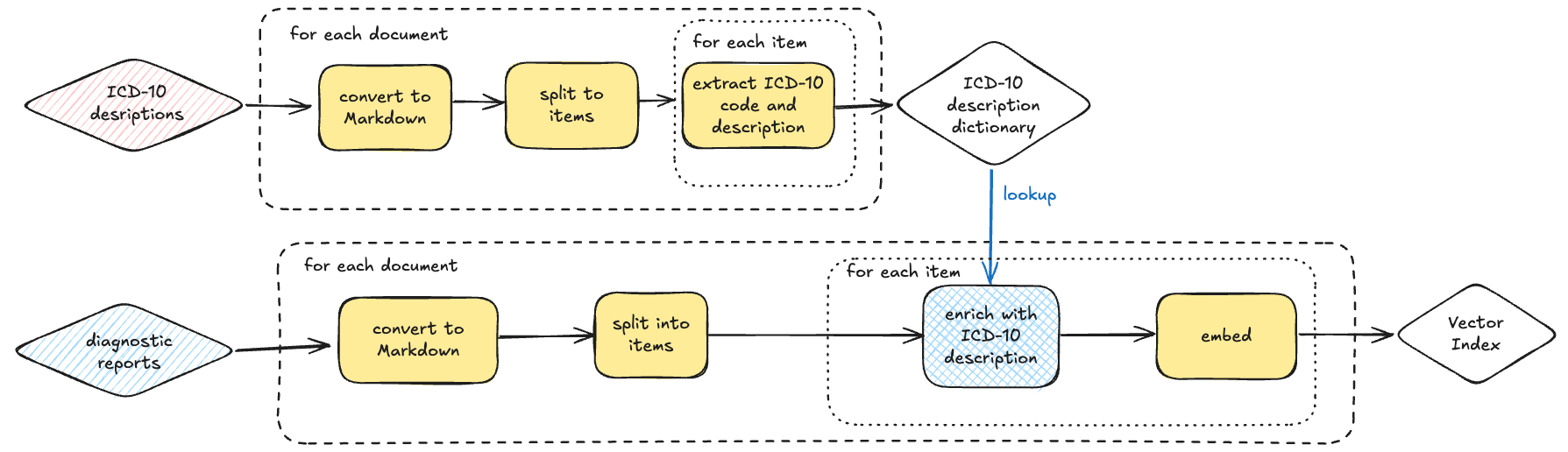

Simple data lookup/enrichment example

Sometimes you want to enrich your data with metadata looked up from other sources. For example, if we want to build an index on diagnostic reports, which use ICD-10 (International Classification of Diseases, 10th Revision) codes to describe diseases, we can have a pipeline like this:

In this example, we do the following:

- On the first path, build an ICD-10 dictionary:

  - For each ICD-10 description document, convert it to markdown with a PDF parser.

  - Split it into items.

  - For each item, extract the ICD-10 code and description, and collect them into storage.

- On the second path, for each report:

  - Parse it to markdown with a PDF parser.

  - Split it into items.

  - For each item, look up the ICD-10 dictionary prepared above and enrich the item with the descriptions for its ICD-10 codes.

Now we have a vector index built from diagnostic reports enriched with ICD-10 descriptions.
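The two paths can be sketched as below, starting from text that has already been parsed to markdown. This is a sketch under assumptions, not a reference implementation: the one-item-per-line layout, the simplified code pattern, and the function names `build_icd10_dictionary` and `enrich_report` are all hypothetical.

```python
import re

# Simplified ICD-10 code pattern (assumption): a letter, two digits, optional decimal part.
ICD10_CODE = re.compile(r"\b[A-Z][0-9]{2}(?:\.[0-9A-Z]{1,4})?\b")

def build_icd10_dictionary(markdown: str) -> dict[str, str]:
    """First path: split the description document into items and extract code -> description."""
    dictionary = {}
    for item in markdown.splitlines():
        item = item.strip()
        match = ICD10_CODE.match(item)   # the code is assumed to start the line
        if match:
            dictionary[match.group(0)] = item[match.end():].strip(" -:")
    return dictionary

def enrich_report(markdown: str, dictionary: dict[str, str]) -> list[dict]:
    """Second path: split a report into items and attach descriptions for the codes found."""
    enriched = []
    for item in markdown.splitlines():
        item = item.strip()
        if not item:
            continue
        codes = ICD10_CODE.findall(item)
        enriched.append({
            "text": item,
            # Codes missing from the dictionary are skipped rather than guessed.
            "icd10": {code: dictionary[code] for code in codes if code in dictionary},
        })
    return enriched

# Toy inputs standing in for parsed PDFs.
icd10_markdown = """
E11.9 Type 2 diabetes mellitus without complications
I10 Essential (primary) hypertension
"""
report_markdown = """
Patient presents with elevated blood pressure (I10).
Follow-up for diabetes management, coded E11.9 and Z99.9.
"""
dictionary = build_icd10_dictionary(icd10_markdown)
enriched = enrich_report(report_markdown, dictionary)
```

Each enriched item carries both the original text and the looked-up descriptions, so either or both can be passed on to chunking and embedding. `Z99.9` is absent from this toy dictionary, so it is left out of the enrichment.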