CocoIndex Changelog 2025-07-07

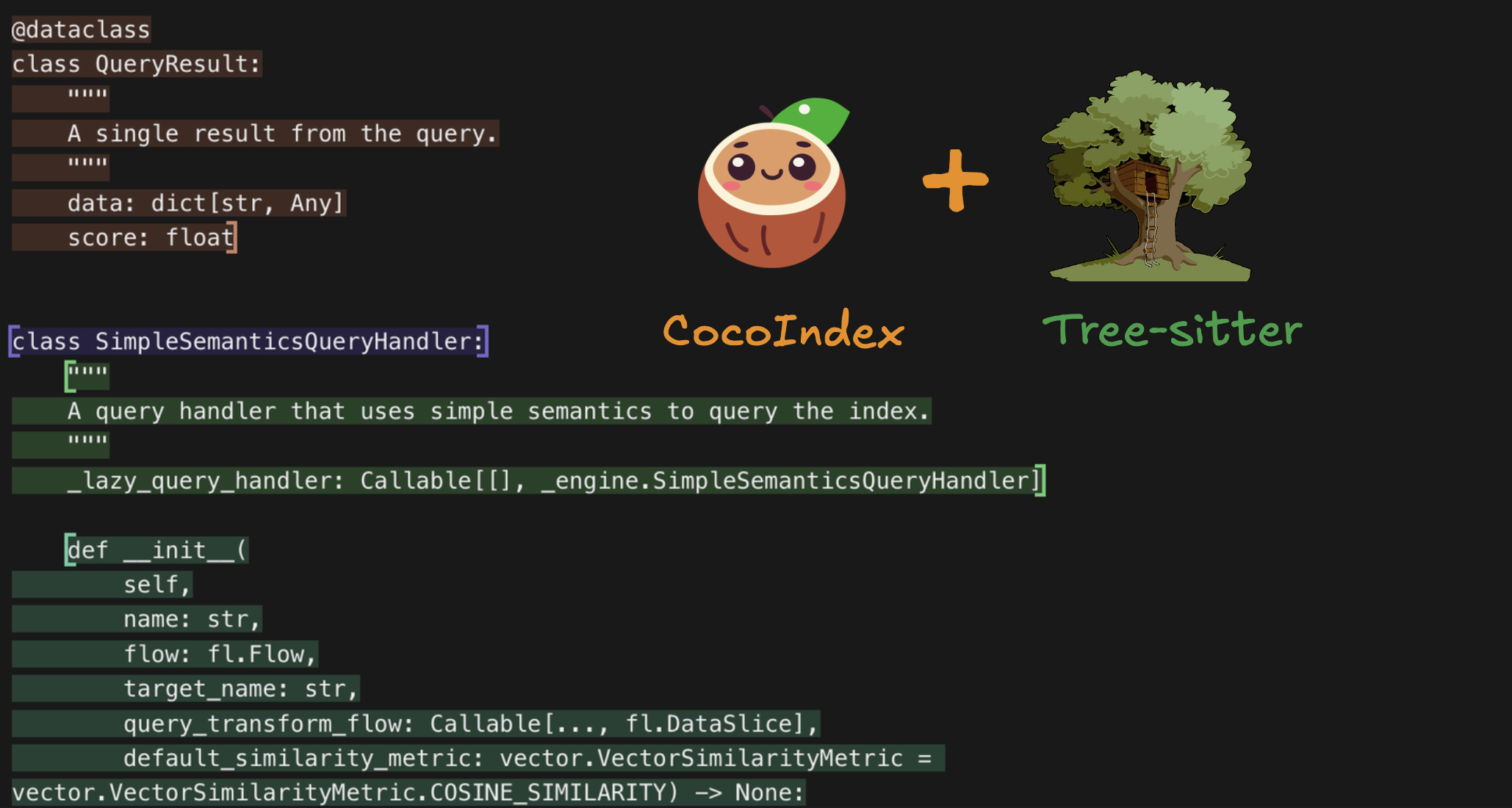

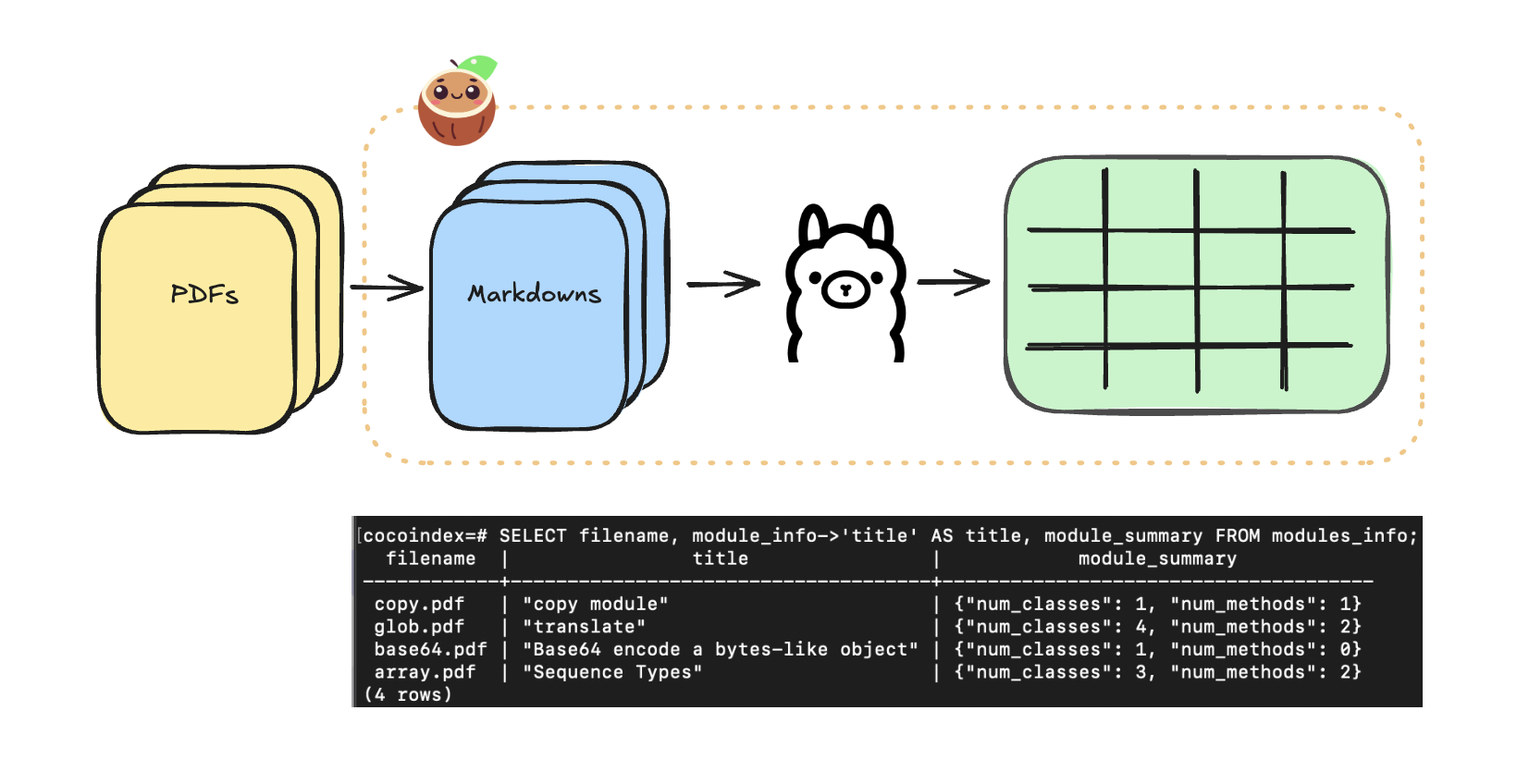

In the past weeks, we've added support for an in-process API, convenient CLI options for setup/drop, native support for EmbedText as a building block, major improvements to codebase indexing support, and many core improvements across 10+ releases.

We're excited to share our progress with you! We'll be publishing these updates weekly, but since this is our first one, we're covering highlights from the last two weeks.

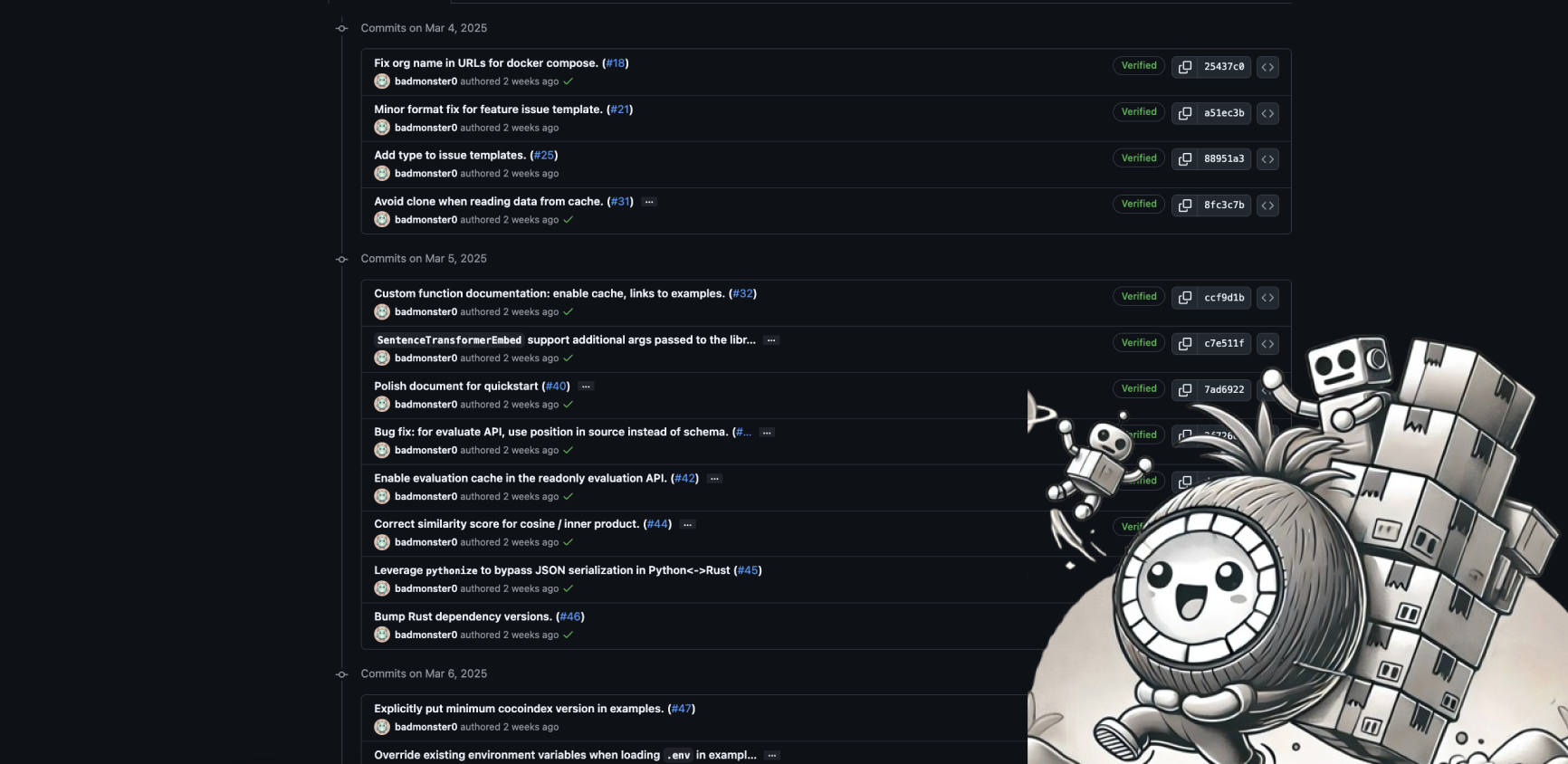

We had 9 releases in the last 2 weeks.