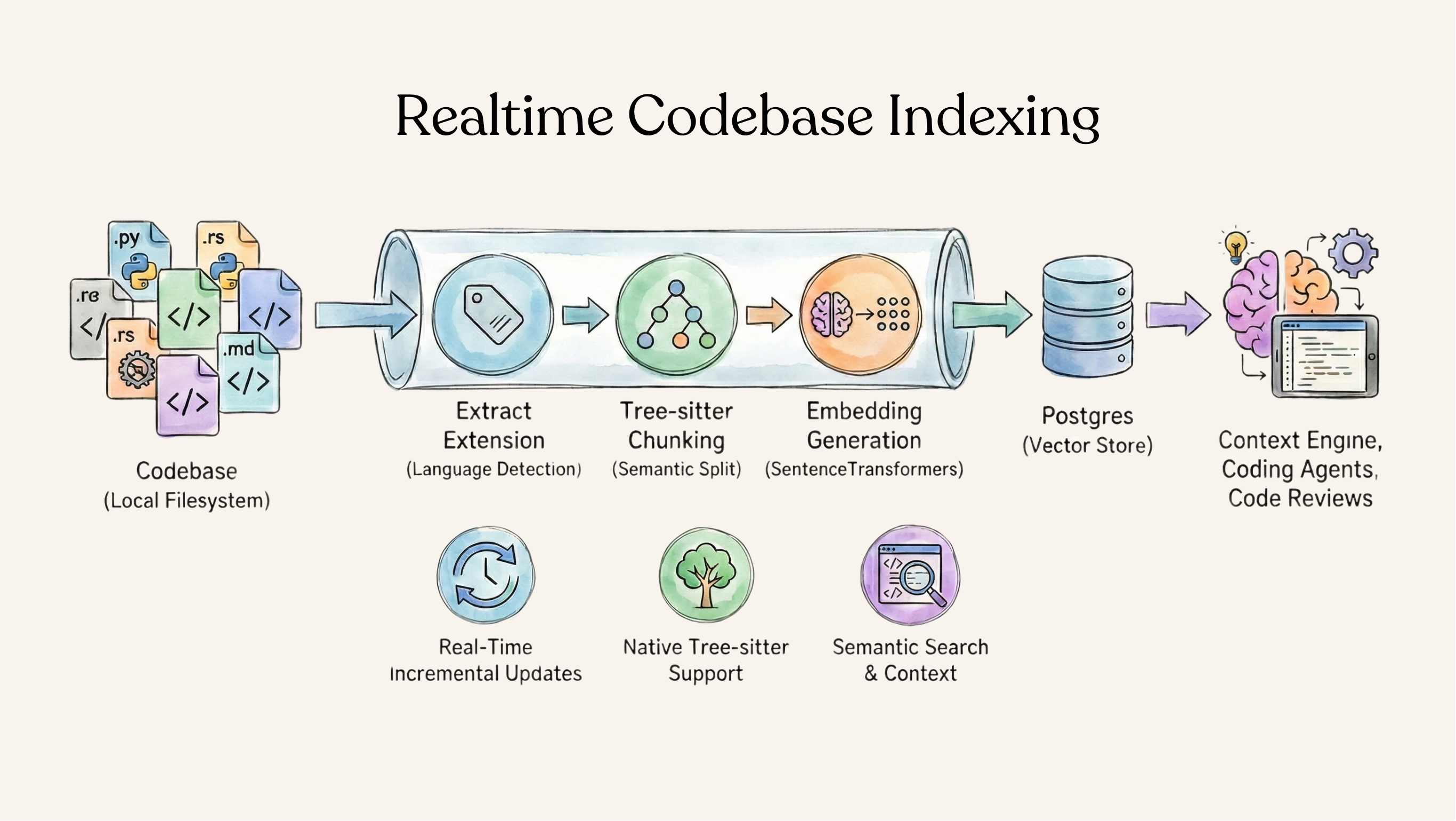

Real-time Codebase Indexing

Overview

In this tutorial, we will build a codebase index. CocoIndex provides built-in support for codebase chunking, with native Tree-sitter support. It works with large codebases and updates in near real time through incremental processing: only what has changed is reprocessed.
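
To make "only what has changed is reprocessed" concrete, here is a minimal sketch of change detection by content fingerprint. It illustrates the general idea only and is not a description of CocoIndex's internal mechanism; `fingerprint`, `plan_update`, and the in-memory state dictionary are hypothetical names introduced for this example.

```python
import hashlib


def fingerprint(content: str) -> str:
    """Return a stable hash of a file's content."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def plan_update(previous: dict[str, str], files: dict[str, str]):
    """Compare stored fingerprints with the current files.

    previous maps filename -> fingerprint from the last run (hypothetical state).
    files maps filename -> current content.
    Returns (to_process, to_delete, new_state).
    """
    new_state = {name: fingerprint(body) for name, body in files.items()}
    # A file is reprocessed when it is new or its fingerprint differs.
    to_process = sorted(n for n, fp in new_state.items() if previous.get(n) != fp)
    # A file that disappeared must have its stale index entries removed.
    to_delete = sorted(n for n in previous if n not in new_state)
    return to_process, to_delete, new_state


# First run: nothing is known yet, so every file is processed.
changed, removed, state = plan_update({}, {"a.py": "x = 1", "b.py": "y = 2"})
# Second run: only b.py has new content, so only b.py is reprocessed.
changed_again, removed_again, state = plan_update(state, {"a.py": "x = 1", "b.py": "y = 3"})
```

The work done on each run is proportional to what changed rather than to the size of the codebase, which is what makes near-real-time updates practical.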

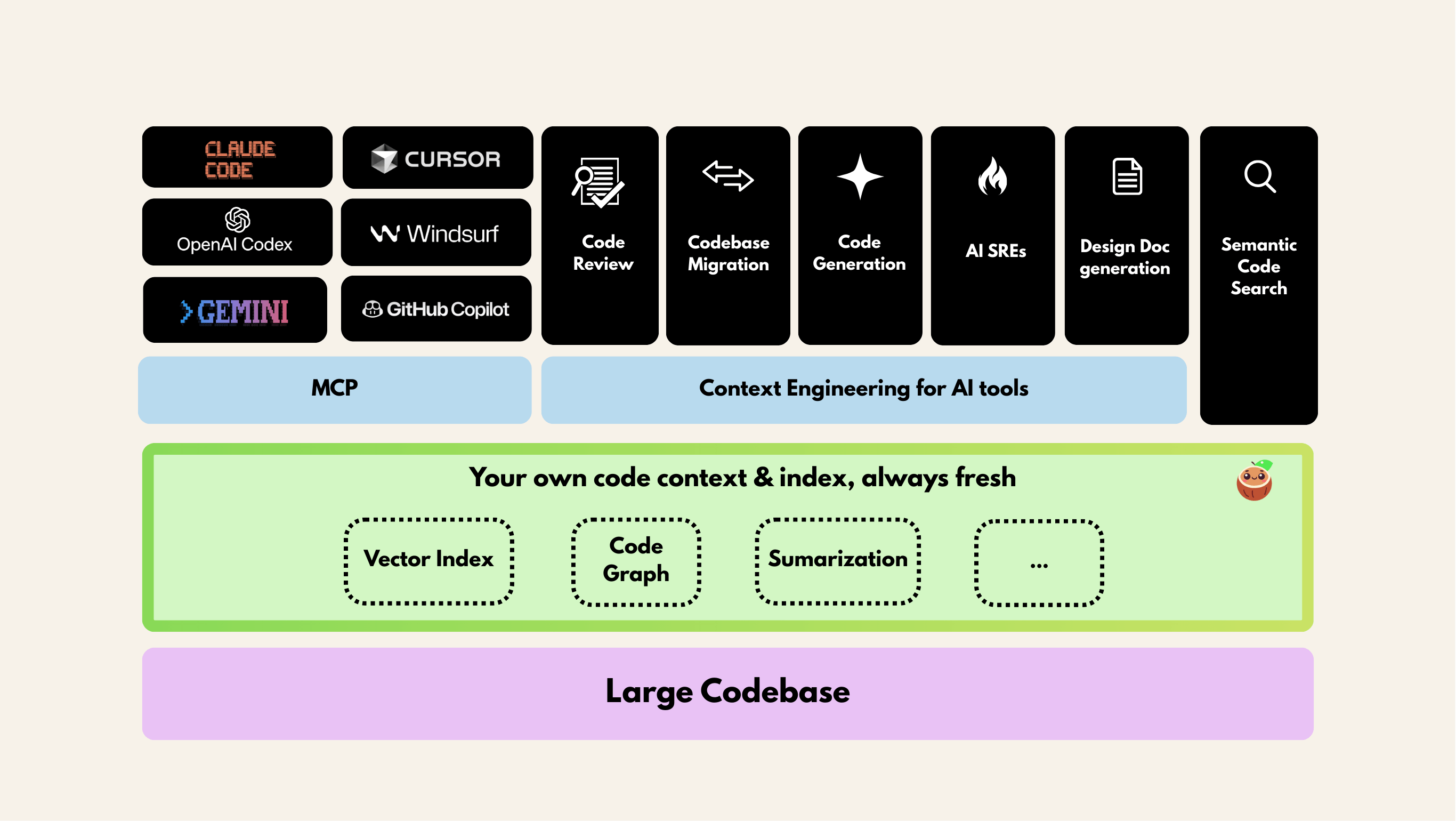

Use Cases

A wide range of applications can be built with an effective codebase index that is always up-to-date.

- Semantic code context for AI coding agents like Claude, Codex, Gemini CLI.

- MCP for code editors such as Cursor, Windsurf, and VSCode.

- Context-aware code search applications—semantic code search, natural language code retrieval.

- Context for code review agents—AI code review, automated code analysis, code quality checks, pull request summarization.

- Automated code refactoring, large-scale code migration.

- SRE workflows: enable rapid root cause analysis, incident response, and change impact assessment by indexing infrastructure-as-code, deployment scripts, and config files for semantic search and lineage tracking.

- Automatically generate design documentation from code—keep design docs up-to-date.

Flow Overview

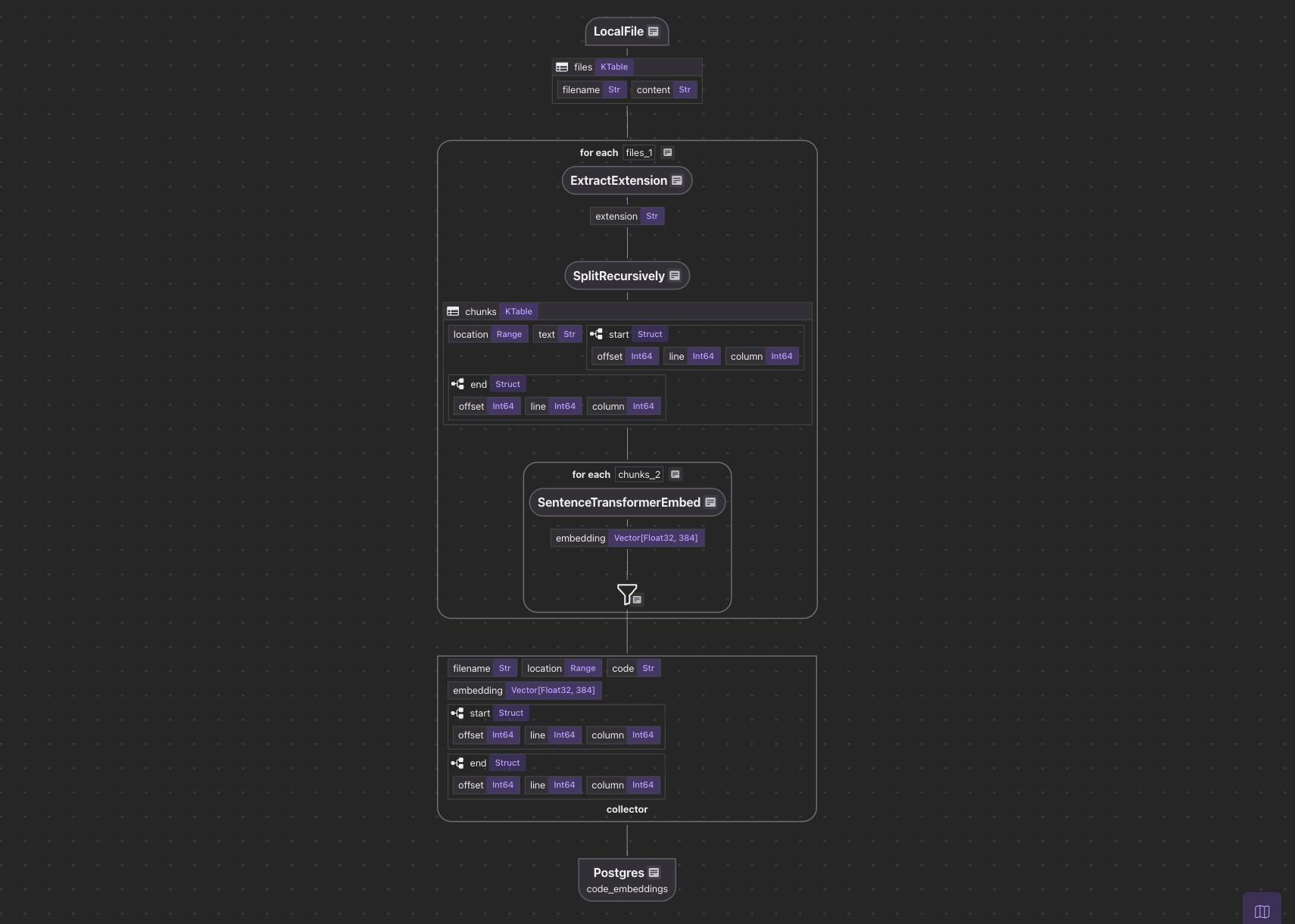

The flow is composed of the following steps:

- Read code files from the local filesystem

- Extract file extensions, to get the language of the code for Tree-sitter to parse

- Split code into semantic chunks using Tree-sitter

- Generate embeddings for each chunk

- Store in a vector database for retrieval

Setup

- Install Postgres by following the installation guide.

- Install CocoIndex:

  pip install -U cocoindex

Add the codebase as a source

We will index the CocoIndex codebase. Here we use the LocalFile source to ingest files from the CocoIndex codebase root directory.

import os
import cocoindex

@cocoindex.flow_def(name="CodeEmbedding")
def code_embedding_flow(flow_builder: cocoindex.FlowBuilder, data_scope: cocoindex.DataScope):
    # Ingest files from the directory two levels above this example.
    data_scope["files"] = flow_builder.add_source(
        cocoindex.sources.LocalFile(path=os.path.join('..', '..'),
                                    included_patterns=["*.py", "*.rs", "*.toml", "*.md", "*.mdx"],
                                    excluded_patterns=[".*", "target", "**/node_modules"]))
    # Collector that gathers one row per embedded chunk.
    code_embeddings = data_scope.add_collector()

- Include files with the extensions .py, .rs, .toml, .md, and .mdx.

- Exclude files and directories starting with ., target in the root, and node_modules under any directory.

flow_builder.add_source will create a table with sub fields (filename, content).
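
The include and exclude rules can be sanity-checked with a small stand-alone sketch. This is not CocoIndex's own pattern matcher: the rules are hard-coded from the description above, `is_indexed` is a hypothetical helper, and the sketch assumes that a leading "." on any path component excludes the path.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

INCLUDED_PATTERNS = ["*.py", "*.rs", "*.toml", "*.md", "*.mdx"]


def is_indexed(path: str) -> bool:
    """Approximate the include/exclude rules for a relative POSIX path."""
    parts = PurePosixPath(path).parts
    if not parts:
        return False
    # Assumption: a leading "." on any path component excludes the path.
    if any(part.startswith(".") for part in parts):
        return False
    # "target" is excluded only when it sits at the root.
    if parts[0] == "target":
        return False
    # "node_modules" is excluded under any directory.
    if "node_modules" in parts:
        return False
    # Finally, the file name must match one of the included patterns.
    return any(fnmatchcase(parts[-1], pattern) for pattern in INCLUDED_PATTERNS)
```

Under these assumptions, `is_indexed("python/cocoindex/flow.py")` is true, while `target/debug/build.rs`, `docs/node_modules/pkg/readme.md`, and `Cargo.lock` are all skipped.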

Process each file and collect the information

Extract the extension of a filename

We need to pass the language (or extension) to Tree-sitter to parse the code. Let's define a function to extract the extension of a filename while processing each file.

@cocoindex.op.function()
def extract_extension(filename: str) -> str:
    """Extract the extension of a filename."""
    return os.path.splitext(filename)[1]
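
To see what this function returns for different filenames, the sketch below runs the same `os.path.splitext` call as plain Python, without the CocoIndex decorator. The sample filenames are made up for illustration.

```python
import os


def extract_extension(filename: str) -> str:
    """Plain-Python copy of the function above, without the CocoIndex decorator."""
    return os.path.splitext(filename)[1]


examples = ["src/lib.rs", "docs/intro.mdx", "archive.tar.gz", "Makefile", ".gitignore"]
extensions = {name: extract_extension(name) for name in examples}
# extensions == {"src/lib.rs": ".rs", "docs/intro.mdx": ".mdx",
#                "archive.tar.gz": ".gz", "Makefile": "", ".gitignore": ""}
```

The result keeps its leading dot, only the last suffix is returned, and names without an extension (including dotfiles) yield an empty string.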

Split the file into chunks

We use the SplitRecursively function to split the file into chunks. SplitRecursively is a CocoIndex building block with native Tree-sitter integration. When processing code, pass the language to its language parameter.

# Continues inside code_embedding_flow.
with data_scope["files"].row() as file:
    # Extract the extension of the filename.
    file["extension"] = file["filename"].transform(extract_extension)
    # Split the content into chunks, using the extension as the language hint.
    file["chunks"] = file["content"].transform(
        cocoindex.functions.SplitRecursively(),
        language=file["extension"], chunk_size=1000, chunk_overlap=300)
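
To build intuition for the chunk_size and chunk_overlap parameters, here is a deliberately naive sketch that cuts fixed-size character windows. It is not how SplitRecursively works (that splits along syntax boundaries found by Tree-sitter); `split_with_overlap` is a hypothetical helper, and counting in characters is an assumption of this sketch.

```python
def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[tuple[int, str]]:
    """Cut text into windows of chunk_size characters.

    Consecutive windows share chunk_overlap characters.
    Returns (start_offset, chunk_text) pairs.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append((start, text[start:start + chunk_size]))
        # Stop once the current window already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks


chunks = split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1)
# chunks == [(0, "abcd"), (3, "defg"), (6, "ghij")]
```

The overlap means text near a boundary appears in both neighbouring chunks, so a match that straddles the boundary is still retrievable from at least one of them.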

Embed the chunks

We use SentenceTransformerEmbed to embed the chunks.

@cocoindex.transform_flow()
def code_to_embedding(text: cocoindex.DataSlice[str]) -> cocoindex.DataSlice[list[float]]:
    return text.transform(
        cocoindex.functions.SentenceTransformerEmbed(
            model="sentence-transformers/all-MiniLM-L6-v2"))

@cocoindex.transform_flow() is needed to share the transformation between indexing and querying. When building a vector index and querying against it, the embedding computation must be the same on both sides.

Then for each chunk, we will embed it using the code_to_embedding function, and collect the embeddings to the code_embeddings collector.

# Same per-file scope as above; the chunk loop nests inside it.
with data_scope["files"].row() as file:
    with file["chunks"].row() as chunk:
        chunk["embedding"] = chunk["text"].call(code_to_embedding)
        code_embeddings.collect(filename=file["filename"], location=chunk["location"],
                                code=chunk["text"], embedding=chunk["embedding"])

Export the embeddings

# Continues inside code_embedding_flow, after the per-file scope.
code_embeddings.export(
    "code_embeddings",
    cocoindex.storages.Postgres(),
    primary_key_fields=["filename", "location"],
    vector_indexes=[cocoindex.VectorIndex("embedding", cocoindex.VectorSimilarityMetric.COSINE_SIMILARITY)])

We use Cosine Similarity to measure the similarity between the query and the indexed data.

Query the index

We match user-provided text against the index with a SQL query, reusing the embedding operation from the indexing flow.

# Assumption: ConnectionPool is psycopg_pool's pool, whose API matches the calls below.
from psycopg_pool import ConnectionPool

def search(pool: ConnectionPool, query: str, top_k: int = 5):
    # Get the table name, for the export target in the code_embedding_flow above.
    table_name = cocoindex.utils.get_target_storage_default_name(code_embedding_flow, "code_embeddings")
    # Evaluate the transform flow defined above with the input query, to get the embedding.
    query_vector = code_to_embedding.eval(query)
    # Run the query and get the results.
    with pool.connection() as conn:
        with conn.cursor() as cur:
            cur.execute(f"""
                SELECT filename, code, embedding <=> %s::vector AS distance
                FROM {table_name} ORDER BY distance LIMIT %s
            """, (query_vector, top_k))
            return [
                {"filename": row[0], "code": row[1], "score": 1.0 - row[2]}
                for row in cur.fetchall()
            ]
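
The SQL above orders rows by cosine distance (the `<=>` operator in pgvector) and reports `1.0 - distance` as the score, which is the cosine similarity. The following sketch reproduces that ranking in memory over a handful of toy two-dimensional vectors, as a way to check the arithmetic. `search_in_memory`, the row tuples, and the vectors are hypothetical; a real index holds model embeddings and uses the database's vector index instead of a full scan.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity of two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def search_in_memory(rows, query_vector, top_k=5):
    """rows is a list of (filename, code, embedding) tuples (a toy in-memory table)."""
    ranked = sorted(
        ((cosine_distance(embedding, query_vector), filename, code)
         for filename, code, embedding in rows),
        key=lambda item: item[0],  # ORDER BY distance
    )
    # LIMIT top_k, then convert distance back to a similarity score.
    return [{"filename": f, "code": c, "score": 1.0 - d} for d, f, c in ranked[:top_k]]


rows = [
    ("a.py", "def a(): ...", [1.0, 0.0]),
    ("b.py", "def b(): ...", [0.0, 1.0]),
    ("c.py", "def c(): ...", [1.0, 1.0]),
]
results = search_in_memory(rows, [1.0, 0.0], top_k=2)
# The closest row is a.py (same direction as the query), followed by c.py.
```

A higher score means a closer match, which is why the tutorial's results print the score first.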

Define a main function to run queries from the terminal.

def main():
    # Initialize the database connection pool.
    pool = ConnectionPool(os.getenv("COCOINDEX_DATABASE_URL"))
    # Run queries in a loop to demonstrate the query capabilities.
    while True:
        try:
            query = input("Enter search query (or Enter to quit): ")
            if query == '':
                break
            # Run the query function with the database connection pool and the query.
            results = search(pool, query)
            print("\nSearch results:")
            for result in results:
                print(f"[{result['score']:.3f}] {result['filename']}")
                print(f" {result['code']}")
                print("---")
            print()
        except KeyboardInterrupt:
            break

if __name__ == "__main__":
    main()

Run the index setup & update

- Install dependencies:

  pip install -e .

- Set up and update the index:

  cocoindex update main

  You'll see the state of the index updates in the terminal.

Test the query

At this point, you can start the CocoIndex server and develop your RAG runtime against the data. To test your index, run:

python main.py

When you see the prompt, enter your search query, for example: spec. Each returned entry contains the score (cosine similarity), the filename, and the matched code snippet.

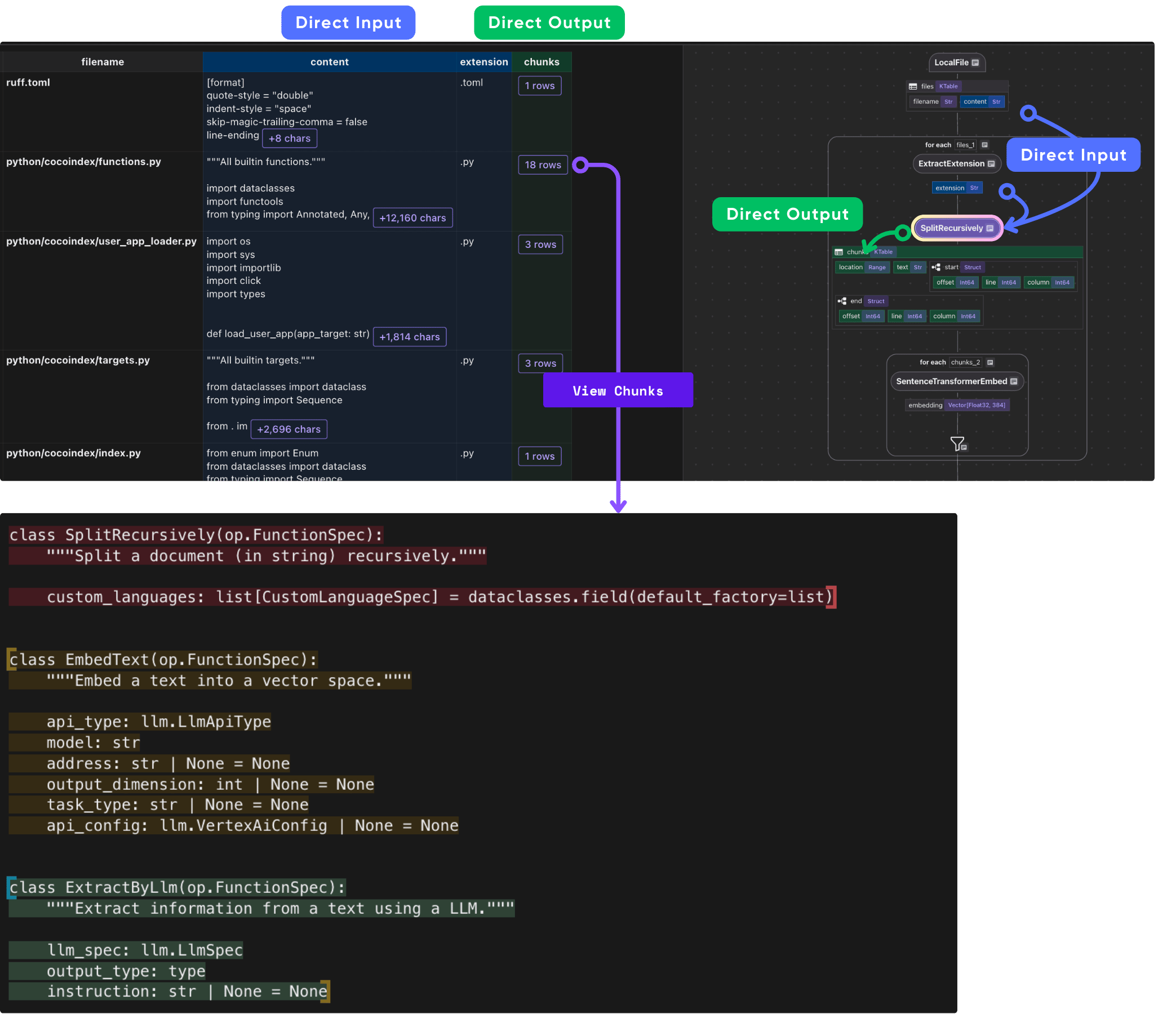

CocoInsight

To better understand the indexing flow, you can use CocoInsight to inspect it step by step during development. To spin it up, run:

cocoindex server -ci main

Follow the URL printed in the terminal, https://cocoindex.io/cocoinsight, to access CocoInsight.

Supported Languages

SplitRecursively has native support for all major programming languages.
