Index Images with ColPali: Multi-Modal Context Engineering

We’re excited to announce that CocoIndex now supports native integration with ColPali — enabling multi-vector, patch-level image indexing using cutting-edge multimodal models.

With just a few lines of code, you can now embed and index images with ColPali’s late-interaction architecture, fully integrated into CocoIndex’s composable flow system.

Star CocoIndex on GitHub if you like it!

Why ColPali for indexing?

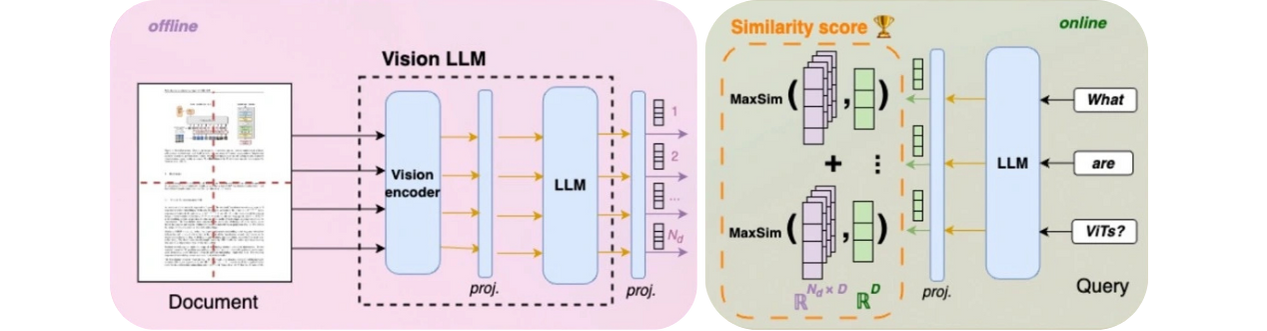

ColPali, whose name combines ColBERT-style late interaction with the PaliGemma vision-language model, is a powerful model for multimodal retrieval.

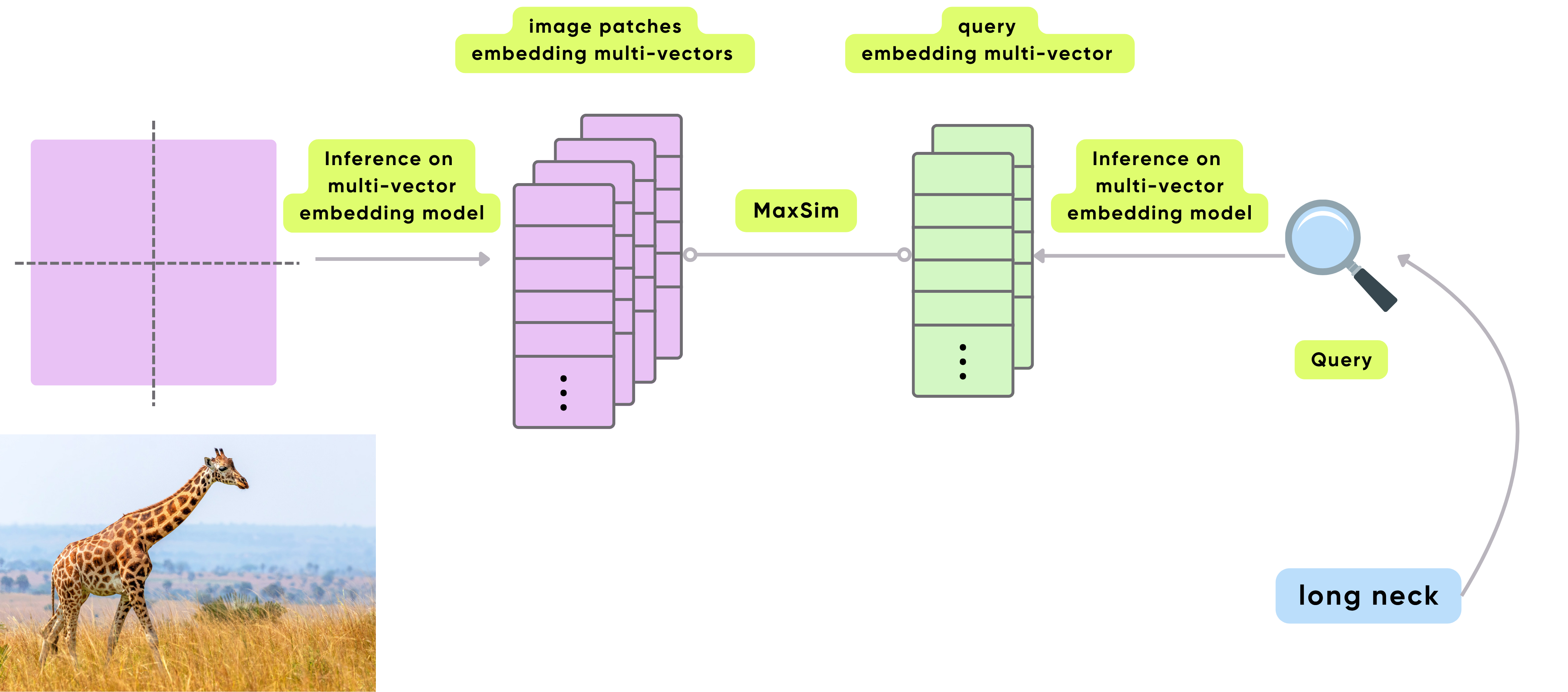

It fundamentally rethinks how documents — especially visually complex or image-rich ones — are represented and searched. Instead of reducing each image or page to a single dense vector (as in traditional bi-encoders), ColPali breaks an image into many smaller patches, preserving local spatial and semantic structure. Each patch receives its own embedding, which together form a multi-vector representation of the complete document.

ColPali's architecture. Source: ColPali

- Fine-Grained Visual Search:
  - Each image is split into a grid (commonly 32x32, generating 1,024 patches per page), and every patch is embedded with contextual awareness of both visual and textual cues.
  - During search, user queries are also broken down into token embeddings, so specific query tokens can be matched to the most relevant image patches. This supports matching at a much finer spatial and semantic granularity than single-vector models.

- Preservation of Spatial and Semantic Structure:
  - Traditional methods collapse document images into global vectors, losing vital layout and region-based context. ColPali's patch embeddings retain spatial relationships and can localize query matches (e.g., finding a diagram in a manual or a table on a form), making search results more accurate and interpretable.

- High Recall Across Object-Rich Scenes:
  - Scenes with multiple objects, dense text, graphics, or mixed content benefit because the model does not "forget" small but important regions. Each patch is considered individually, reducing the likelihood of missing relevant information even in visually cluttered pages.

- Advanced Search Strategies (Late Interaction and MaxSim):
  - ColPali uses the late interaction (LI) paradigm, inspired by ColBERT, in which each query token embedding is compared against all patch embeddings of a document. The MaxSim operation keeps only the maximum similarity score for each query token, then sums these maxima into the final relevance score. This enables precise, interpretable, and efficient retrieval for both large-scale searches and nuanced queries.
  - Late interaction also keeps query-time cost low: document patch embeddings are computed once, at indexing time, so a query only has to be encoded once and compared against precomputed vectors instead of being jointly encoded with every candidate document through cross-attention.

- Bypassing Traditional OCR Pipelines:
  - Because images are processed natively, there is no need for error-prone text extraction or segmentation steps, boosting speed and end-to-end efficiency. This approach can also capture visual elements that OCR skips, such as charts, drawings, or logos.

- Scalability and Storage Efficiency:
  - Compression schemes such as quantization and pruning (for example, the Hierarchical Patch Compression of HPC-ColPali) can further shrink storage needs and speed up similarity computation, helping ColPali-based systems scale to very large document collections or to on-device retrieval with little loss in accuracy.
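In symbols, the MaxSim score described in the list above can be written as follows, where q_1 to q_n are the query's token embeddings, d_1 to d_m are a page's patch embeddings, and the similarity is the dot product (which equals cosine similarity when the vectors are L2-normalized, an assumption of this sketch):

```latex
\operatorname{score}(q, d) = \sum_{i=1}^{n} \max_{1 \le j \le m} \langle q_i, d_j \rangle
```

For instance, with two query tokens whose best-matching patches have similarities 0.9 and 0.7, the page scores 0.9 + 0.7 = 1.6, no matter how poorly its remaining patches match.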

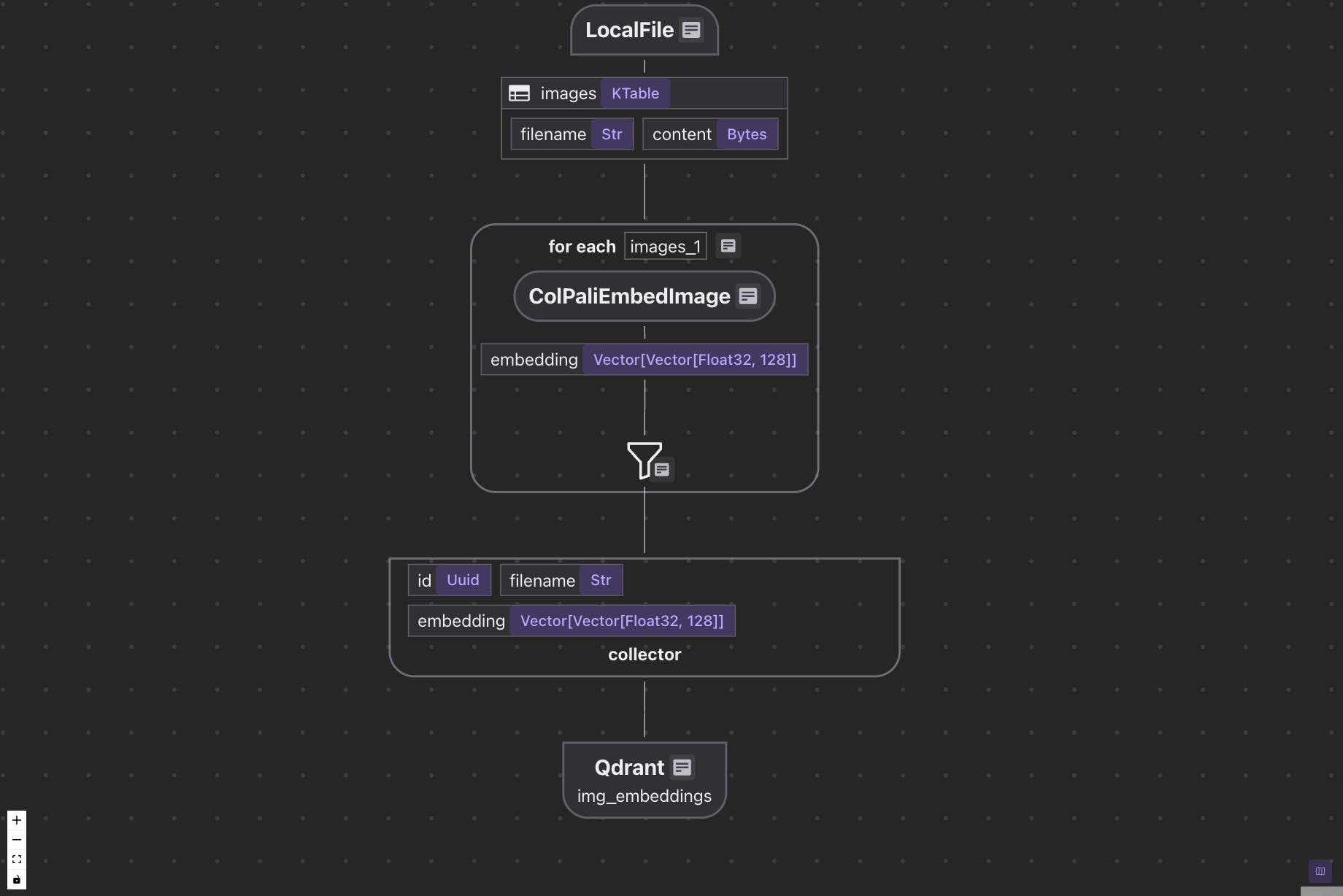

Declare an image indexing flow with CocoIndex and Qdrant

In this example, we will use CocoIndex to index images with ColPali, and Qdrant to store and retrieve the embeddings.

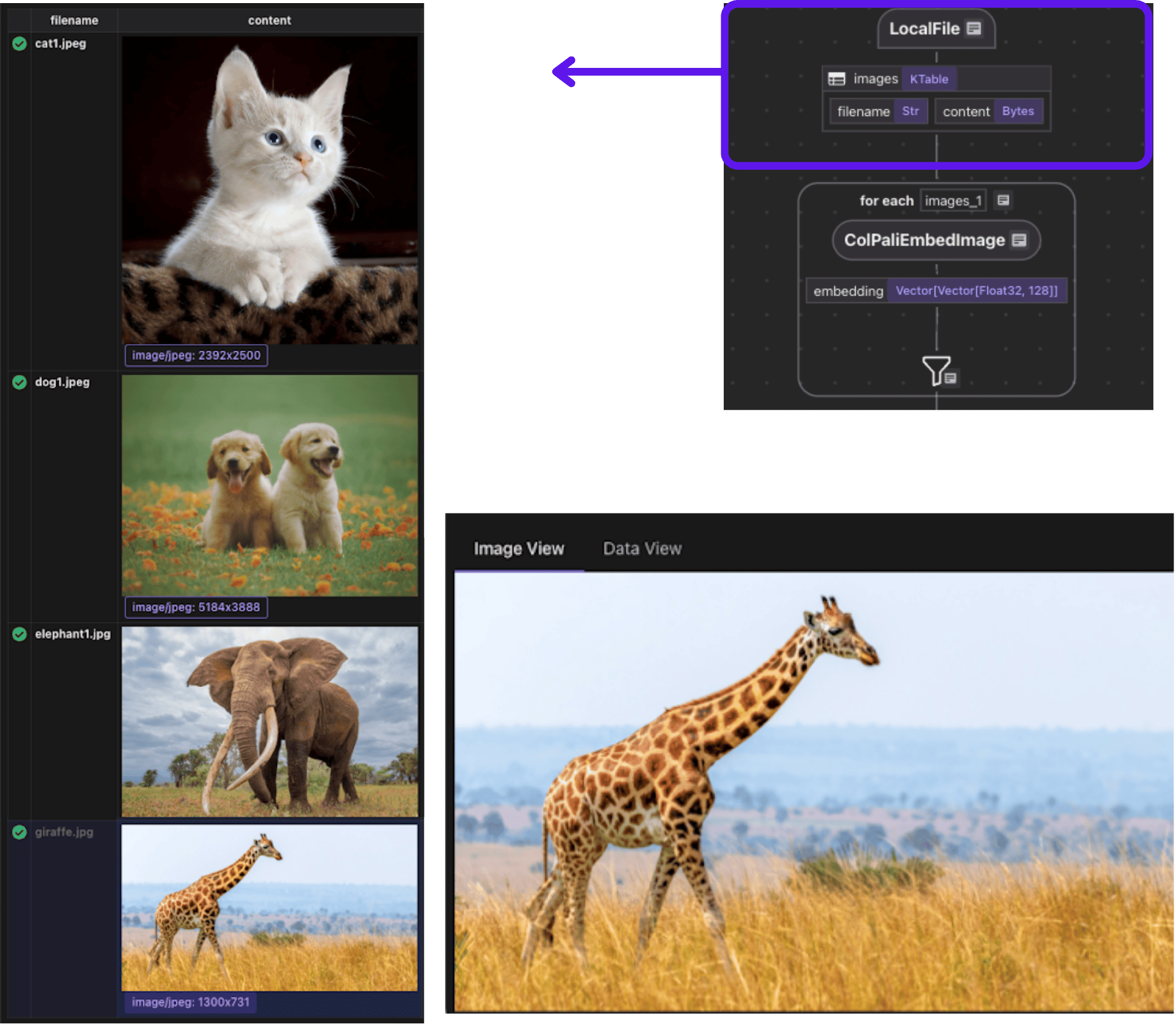

This flow illustrates how we’ll process and index images using ColPali:

- Ingest image files from the local filesystem

- Use ColPali to embed each image into patch-level multi-vectors

- Optionally extract image captions using an LLM

- Export the embeddings (and optional captions) to a Qdrant collection

Check out the full working code here.


1. Ingest the images

We start by defining a flow to read .jpg, .jpeg, and .png files from a local directory using LocalFile.

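```python
import datetime

import cocoindex


@cocoindex.flow_def(name="ImageObjectEmbeddingColpali")
def image_object_embedding_flow(
    flow_builder: cocoindex.FlowBuilder, data_scope: cocoindex.DataScope
) -> None:
    # Read .jpg/.jpeg/.png files from ./img as raw bytes
    data_scope["images"] = flow_builder.add_source(
        cocoindex.sources.LocalFile(
            path="img",
            included_patterns=["*.jpg", "*.jpeg", "*.png"],
            binary=True,
        ),
        # Re-scan the directory for changes every minute
        refresh_interval=datetime.timedelta(minutes=1),
    )
```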

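The add_source function produces a table with one row per file, with fields such as filename and content. Because refresh_interval is set, the directory is re-scanned for new or changed images every minute while the flow is live-updating.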

2. Process each image and collect the embedding

2.1 Embed the image with ColPali

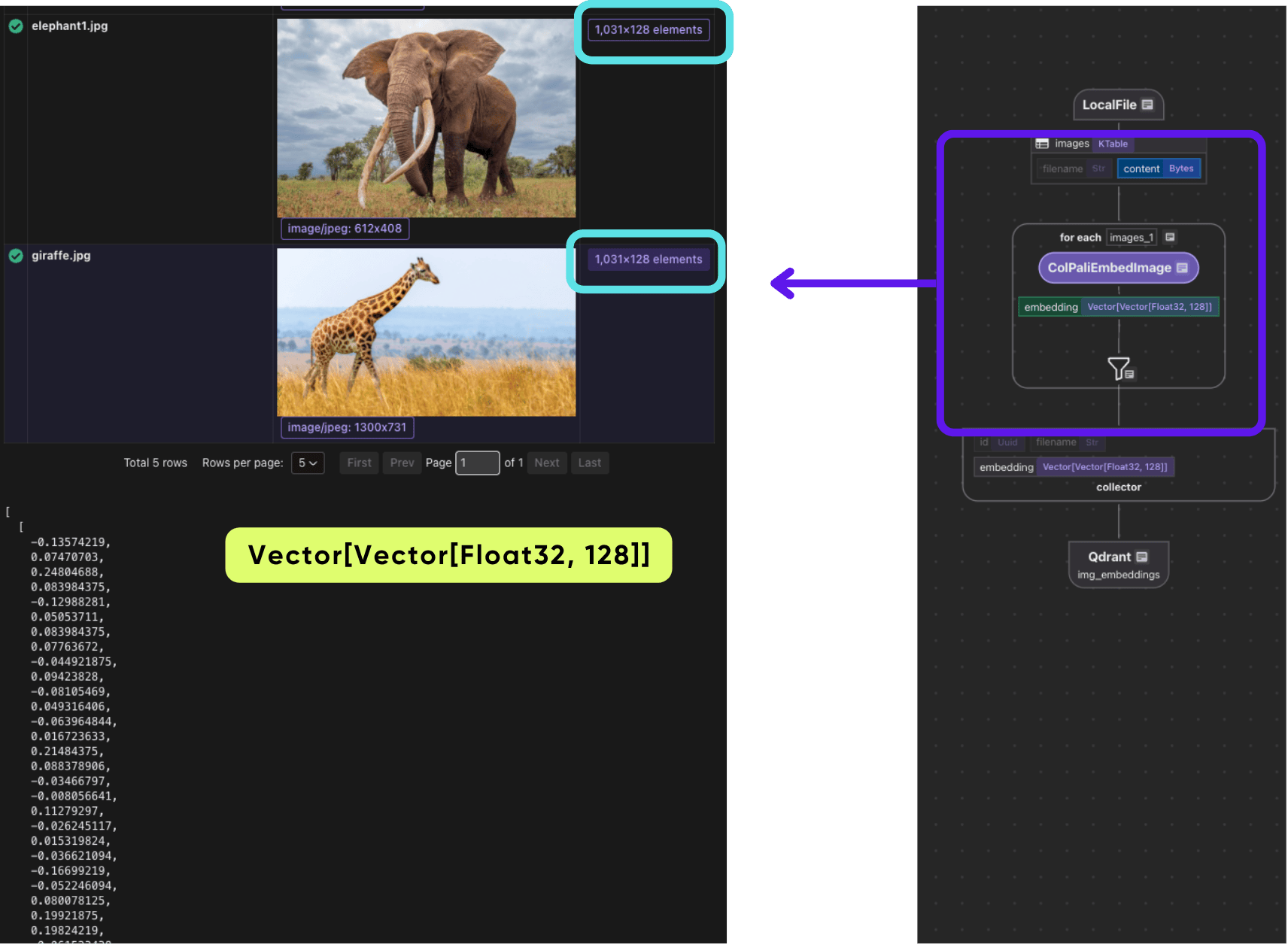

We use CocoIndex's built-in ColPaliEmbedImage function, which returns a multi-vector representation for each image. Each patch receives its own vector, preserving spatial and semantic information.

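```python
# Still inside image_object_embedding_flow:
img_embeddings = data_scope.add_collector()

with data_scope["images"].row() as img:
    # Embed each image into one vector per patch (a multi-vector)
    img["embedding"] = img["content"].transform(
        cocoindex.functions.ColPaliEmbedImage(model="vidore/colpali-v1.2")
    )
```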

This transformation turns the raw image bytes into a list of vectors — one per patch — that can later be used for late interaction search.
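To picture where those per-patch vectors come from, here is a rough sketch of the patch grid. It assumes ColPali's 448x448 input resolution and 14x14-pixel patches (properties of its PaliGemma vision encoder, which the model handles internally), and uses a hypothetical helper, split_into_patches, on a plain list of pixel rows rather than decoded image bytes. It only illustrates how a page becomes a 32x32 grid of 1,024 patches; the real model operates on tensors.

```python
def split_into_patches(
    image: list[list[int]], patch_size: int
) -> list[list[list[int]]]:
    """Cut an image (a list of pixel rows) into square patches, row by row."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):          # walk down the grid
        for left in range(0, width, patch_size):      # then across each grid row
            patches.append(
                [row[left:left + patch_size] for row in image[top:top + patch_size]]
            )
    return patches


if __name__ == "__main__":
    SIDE, PATCH = 448, 14  # assumed ColPali input size and patch size
    page = [[0] * SIDE for _ in range(SIDE)]  # blank stand-in for a page
    patches = split_into_patches(page, PATCH)
    print(len(patches))  # 1024 (a 32x32 grid); the model embeds each one
```

Each of these patches, after passing through the encoder, ends up as one entry in the embedding list stored for the image.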

3. Collect and export the embeddings

Once we’ve processed each image, we collect its metadata and embedding and send it to Qdrant.

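```python
# Still inside the `with data_scope["images"].row() as img:` block
collect_fields = {
    "id": cocoindex.GeneratedField.UUID,  # auto-generated primary key
    "filename": img["filename"],
    "embedding": img["embedding"],
}
img_embeddings.collect(**collect_fields)
```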

Then we export to Qdrant using the Qdrant target:

```python
# After the row block, still inside the flow definition
img_embeddings.export(
    "img_embeddings",
    cocoindex.targets.Qdrant(collection_name="ImageSearchColpali"),
    primary_key_fields=["id"],
)
```

When the flow is set up, this creates a Qdrant collection whose embedding field holds multi-vectors, which ColPali-style late-interaction search requires.

4. Enable real-time indexing

To keep the image index up to date automatically, we wrap the flow in a FlowLiveUpdater:

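```python
from contextlib import asynccontextmanager

import cocoindex
from dotenv import load_dotenv
from fastapi import FastAPI


@asynccontextmanager
async def lifespan(app: FastAPI):
    load_dotenv()  # load environment variables from a .env file
    cocoindex.init()
    # Create or update the resources the flow needs (e.g. the Qdrant collection)
    image_object_embedding_flow.setup(report_to_stdout=True)
    # Watch the source and keep the index continuously updated
    app.state.live_updater = cocoindex.FlowLiveUpdater(image_object_embedding_flow)
    app.state.live_updater.start()
    yield


app = FastAPI(lifespan=lifespan)
```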

This keeps your vector index fresh as new images arrive.

What's actually stored?

Unlike typical image search pipelines that store one global vector per image, this flow stores a multi-vector for each image:

Vector[Vector[Float32, N]]

Where:

- The outer dimension is the number of patches

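- The inner dimension N is the model's output embedding size (128 for ColPali, which projects its language-model hidden states down to 128 dimensions)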

This makes the index multi-vector ready and compatible with late-interaction query strategies such as MaxSim or learned fusion.
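To put this shape into numbers, the sketch below estimates raw per-page storage and shows a simple per-vector int8 scalar quantization. It assumes 1,024 patch vectors per page and N = 128, ignores database overhead, and uses hypothetical helper names; the quantization is a generic technique chosen for illustration, not HPC-ColPali's compression method.

```python
# Back-of-the-envelope storage for one page stored as Vector[Vector[Float32, N]],
# plus a simple per-vector int8 scalar quantization (a generic technique, not HPC-ColPali).
# Assumptions: 1,024 patch vectors per page and N = 128.

NUM_PATCHES = 1024
DIM = 128


def multivector_bytes(num_vectors: int, dim: int, bytes_per_value: int) -> int:
    """Raw size of a multi-vector, ignoring any database overhead."""
    return num_vectors * dim * bytes_per_value


def quantize_int8(vec: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale per vector."""
    scale = max(abs(x) for x in vec) / 127 or 1.0  # fall back to 1.0 for all-zero vectors
    return [round(x / scale) for x in vec], scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Approximately reconstruct the original floats."""
    return [c * scale for c in codes]


print(multivector_bytes(NUM_PATCHES, DIM, 4))  # float32: 524288 bytes (512 KiB)
print(multivector_bytes(NUM_PATCHES, DIM, 1))  # int8: 131072 bytes (128 KiB)

codes, scale = quantize_int8([0.4, -0.2, 1.0, 0.0])
print(codes)  # [51, -25, 127, 0]
```

For comparison, a single float32 vector of the same dimension would take 512 bytes, so under these assumptions a multi-vector page is about 1,024 times larger before compression. That is the trade-off the compression schemes mentioned earlier aim to reduce.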


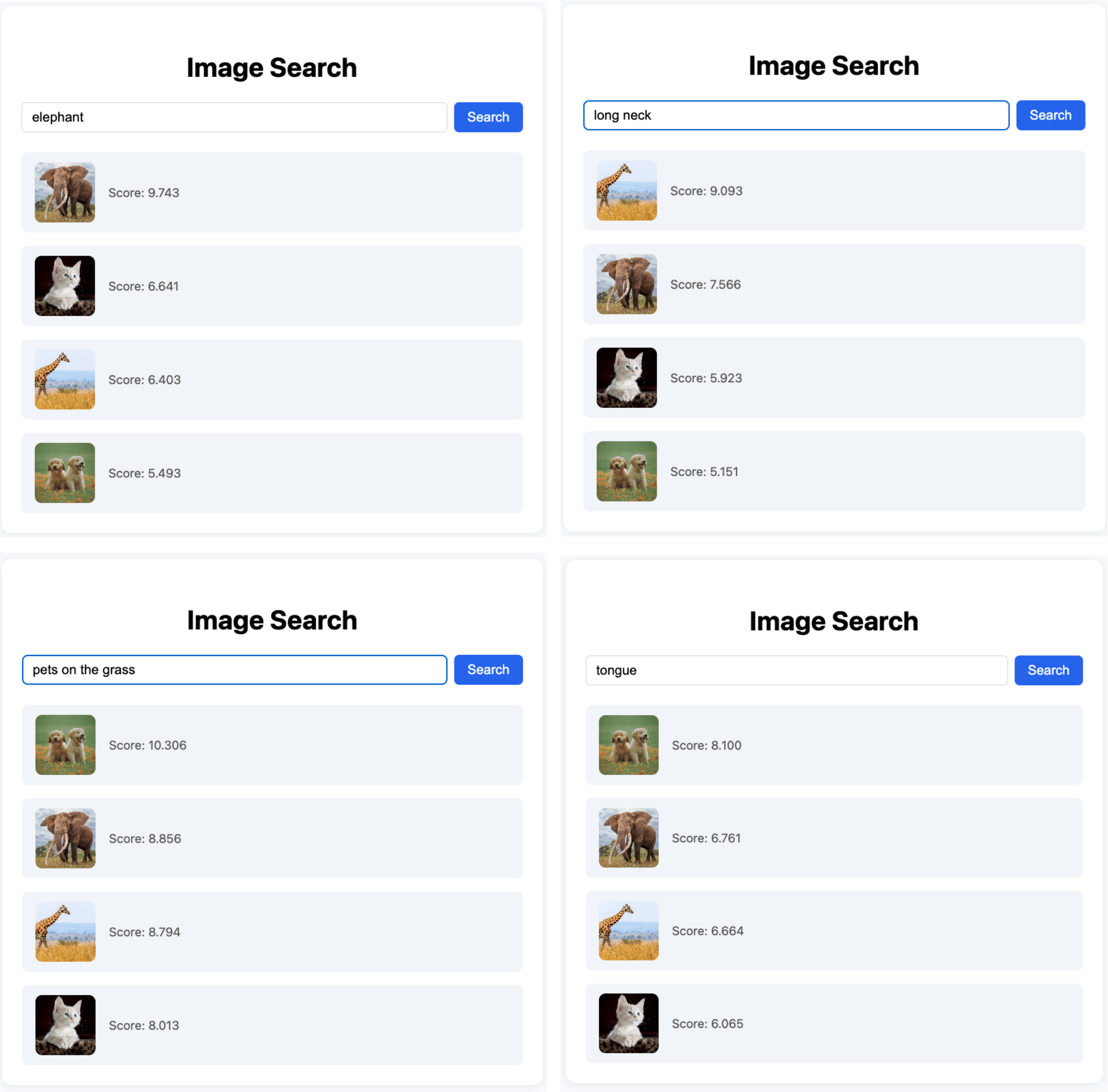

Retrieval and application

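Refer to this example for querying the index and building the application: https://cocoindex.io/blogs/live-image-search#3-query-the-index

Make sure to embed the query with ColPali as well, so that the query's token vectors and the images' patch vectors live in the same embedding space: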

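```python
from typing import Any

from fastapi import Query


@app.get("/search")
def search(
    q: str = Query(..., description="Search query"),
    limit: int = Query(5, description="Number of results"),
) -> Any:
    # Get the multi-vector embedding for the query; text_to_colpali_embedding
    # is a CocoIndex transform flow defined in the full example
    query_embedding = text_to_colpali_embedding.eval(q)
    # ... then query Qdrant with query_embedding and return the top `limit`
    # results (see the example linked above)
```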
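Under the hood, ranking results for query_embedding comes down to the MaxSim score described earlier. The in-memory sketch below makes that concrete with hypothetical helpers (maxsim, brute_force_search), toy 2-dimensional vectors, and the assumption that all vectors are L2-normalized. It is not the example's query code; in the full application the ranking is delegated to Qdrant's multi-vector search.

```python
# Hypothetical in-memory sketch of late-interaction (MaxSim) retrieval.
# Assumes every vector is L2-normalized, so the dot product is cosine similarity.
# A vector database with multi-vector support performs this ranking at scale.

MultiVector = list[list[float]]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def maxsim(query: MultiVector, doc: MultiVector) -> float:
    # For each query token keep its best-matching patch, then sum over tokens.
    return sum(max(dot(q, d) for d in doc) for q in query)


def brute_force_search(
    query: MultiVector, index: dict[str, MultiVector], limit: int = 5
) -> list[tuple[str, float]]:
    # Score every indexed image, then return the `limit` highest-scoring ones.
    scored = [(name, maxsim(query, patches)) for name, patches in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:limit]


# Toy 2-D vectors standing in for real 128-D ColPali embeddings.
index = {
    "chart.png": [[1.0, 0.0], [0.0, 1.0]],
    "photo.jpg": [[0.6, 0.8], [0.8, 0.6]],
}
query = [[1.0, 0.0], [0.0, 1.0]]
print(brute_force_search(query, index, limit=2))
```

Here chart.png ranks first (score 2.0 versus 1.6) because each query token finds an exact match in some patch, even though no single patch matches both tokens. If each image and the query were instead mean-pooled into one normalized vector, both images would reduce to the same direction and tie, which is the kind of detail single-vector search can lose.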

Full working code is available here.

Check it out for yourself! It is fun :) In this image search example, the results look better than those from CLIP, which represents each image with a single dense vector (1D embedding). ColPali's multi-vector representation produces richer, more fine-grained retrieval.

Built with flexibility in mind

Whether you’re working on:

- Visual RAG

- Multimodal retrieval systems

- Fine-grained visual search tools

- Or want to bring image understanding to your AI agent workflows

CocoIndex + ColPali gives you a modular, modern foundation to build from.

Connect to any data source — and keep it in sync

One of CocoIndex’s core strengths is its ability to connect to your existing data sources and automatically keep your index fresh. Beyond local files, CocoIndex natively supports source connectors including:

- Google Drive

- Amazon S3 / SQS

- Azure Blob Storage

See documentation here.

Once connected, CocoIndex continuously watches for changes — new uploads, updates, or deletions — and applies them to your index in real time.

Support us

We’re constantly adding more examples and improving our runtime. If you found this helpful, please ⭐ star CocoIndex on GitHub and share it with others.